Are you looking to identify long-running Azure Data Factory pipelines? Or maybe you are trying to send alerts when a pipeline has been running for hours? This blog post will help you do both.

You don’t need to create additional activities to identify Azure Data Factory pipelines that have been running for longer than expected. Instead, you can use the built-in logs.

Identifying long-running Azure Data Factory workloads will help you spot unusual performance in your data movement and minimize costs.


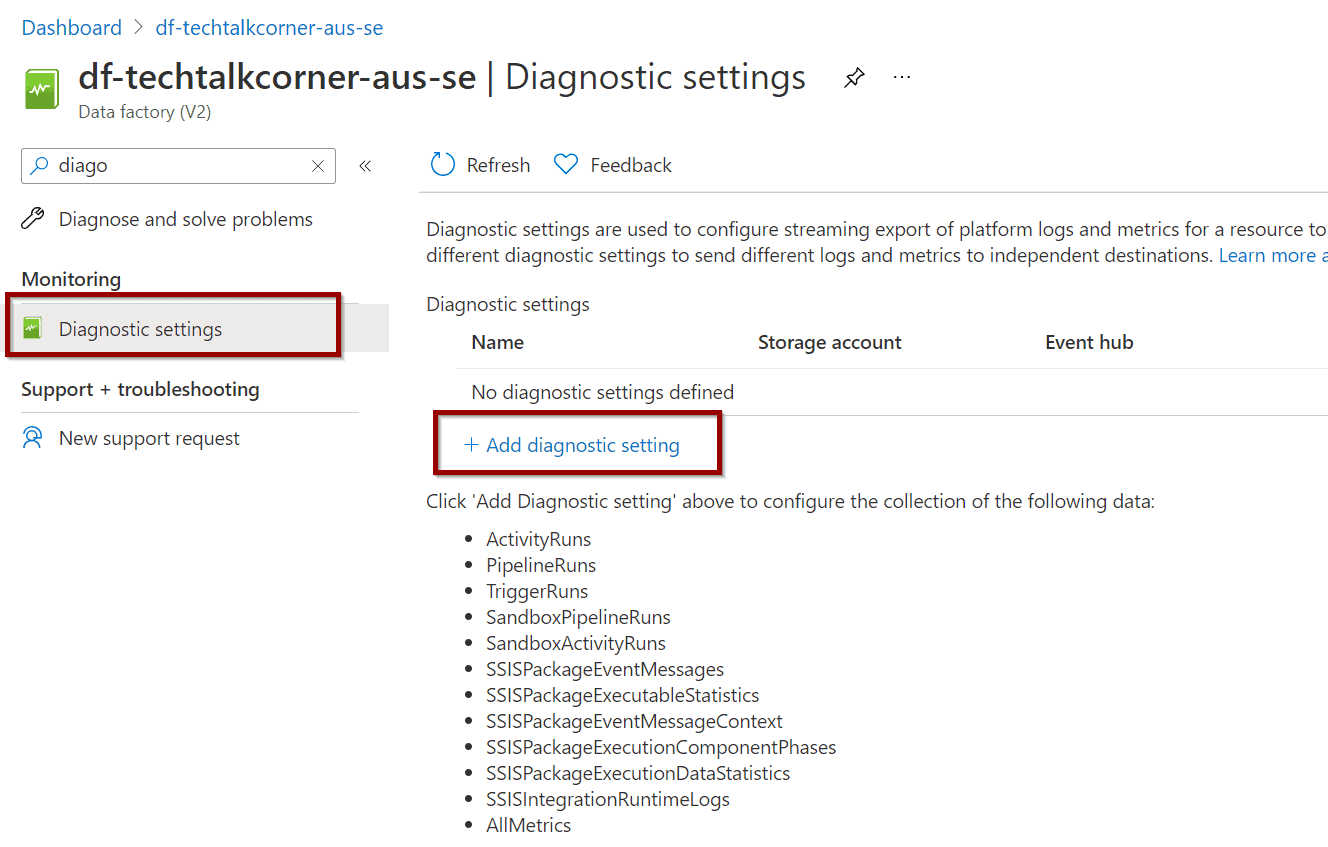

Enable Azure Log Analytics for Azure Data Factory

First, find the diagnostic settings in your Azure Data Factory.

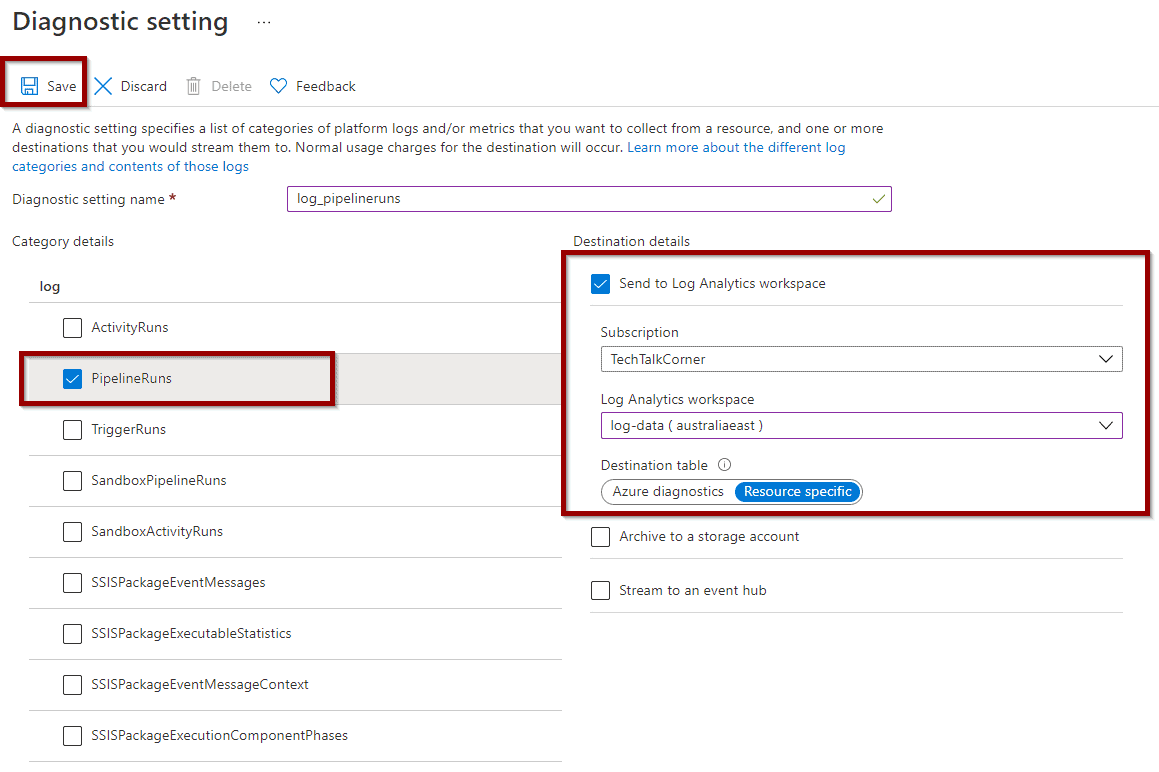

There are many log options. To identify long-running Azure Data Factory pipelines, you only need to enable the PipelineRuns option.

If you are trying to turn on alerts for Long-Running Azure Data Factory activities, you can enable the ActivityRuns option.

Switching on all the options translates to an increase in the ongoing cost of your Azure Log Analytics. However, only turning on PipelineRuns will keep the ongoing cost low.

As you can see above, you can also send the logs to storage accounts and event hubs.

Don’t forget to save the options.

Identify Long-Running Azure Data Factory Pipelines

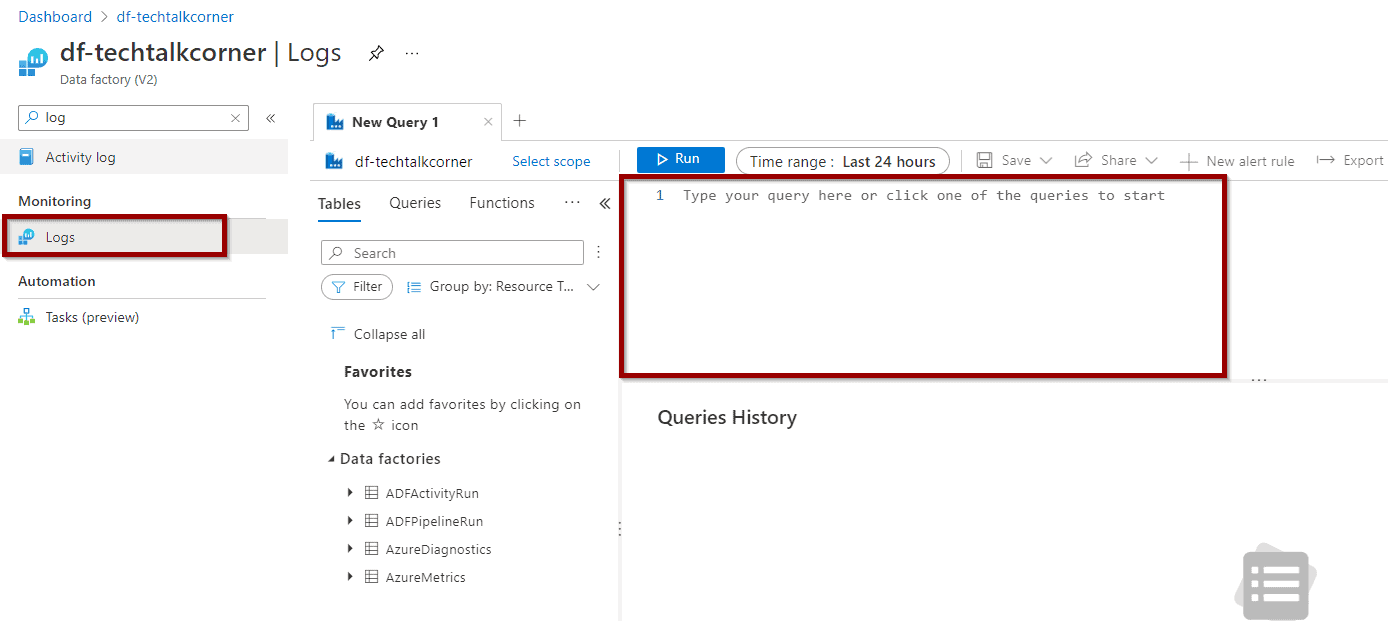

Next, you need to find the Logs option in Azure Data Factory to start writing queries and identify long-running Azure Data Factory pipelines.

Azure Log Analytics uses the Kusto Query Language (KQL), which is case-sensitive.

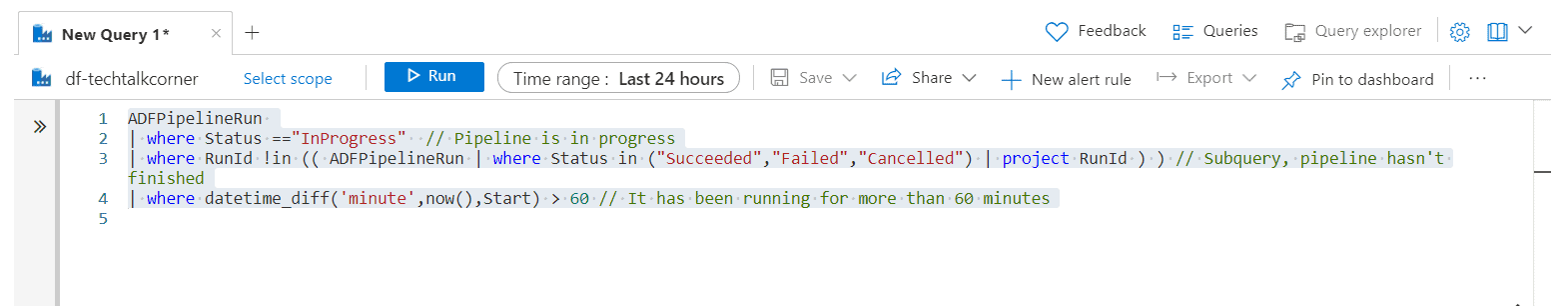

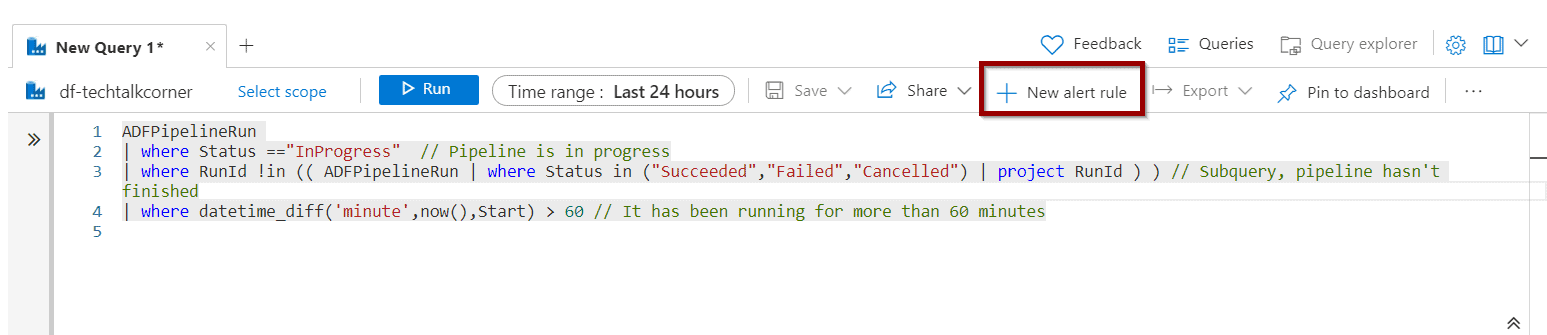

The following query helps you identify the Azure Data Factory pipelines that have been running for more than 60 minutes. Copy, paste and modify the following query to identify Long-Running Azure Data Factory pipelines.

ADFPipelineRun
| where Status == "InProgress" // Pipeline is in progress
| where RunId !in ((ADFPipelineRun | where Status in ("Succeeded", "Failed", "Cancelled") | project RunId)) // Subquery: pipeline hasn't finished
| where datetime_diff('minute', now(), Start) > 60 // It has been running for more than 60 minutes
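
As a variation on the query above, the following sketch keeps only the most recent log record for each run and then checks whether that record still shows InProgress, which avoids the subquery. The `threshold` variable and the `RunningFor` column are names introduced here for illustration, and the sketch assumes the logs land in the same ADFPipelineRun table used above. Treat it as a starting point rather than a definitive query.

```kusto
// Assumed threshold; adjust it to the run time you expect.
let threshold = 60m;
ADFPipelineRun
| summarize arg_max(TimeGenerated, *) by RunId   // keep the latest log record for each run
| where Status == "InProgress"                   // the latest record still shows the run in progress
| extend RunningFor = now() - Start              // elapsed time as a timespan (illustrative column name)
| where RunningFor > threshold
| project PipelineName, RunId, Start, RunningFor
| order by RunningFor desc
```

Projecting PipelineName and the elapsed time makes the result easier to read when the query is pinned to a dashboard or used in an alert.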

You can save the query, pin it to the dashboard or even use it in a workbook as described in this blog post about Log Analytics Monitor in Azure Data Factory.

Create Alerts for Long-Running Azure Data Factory Pipelines

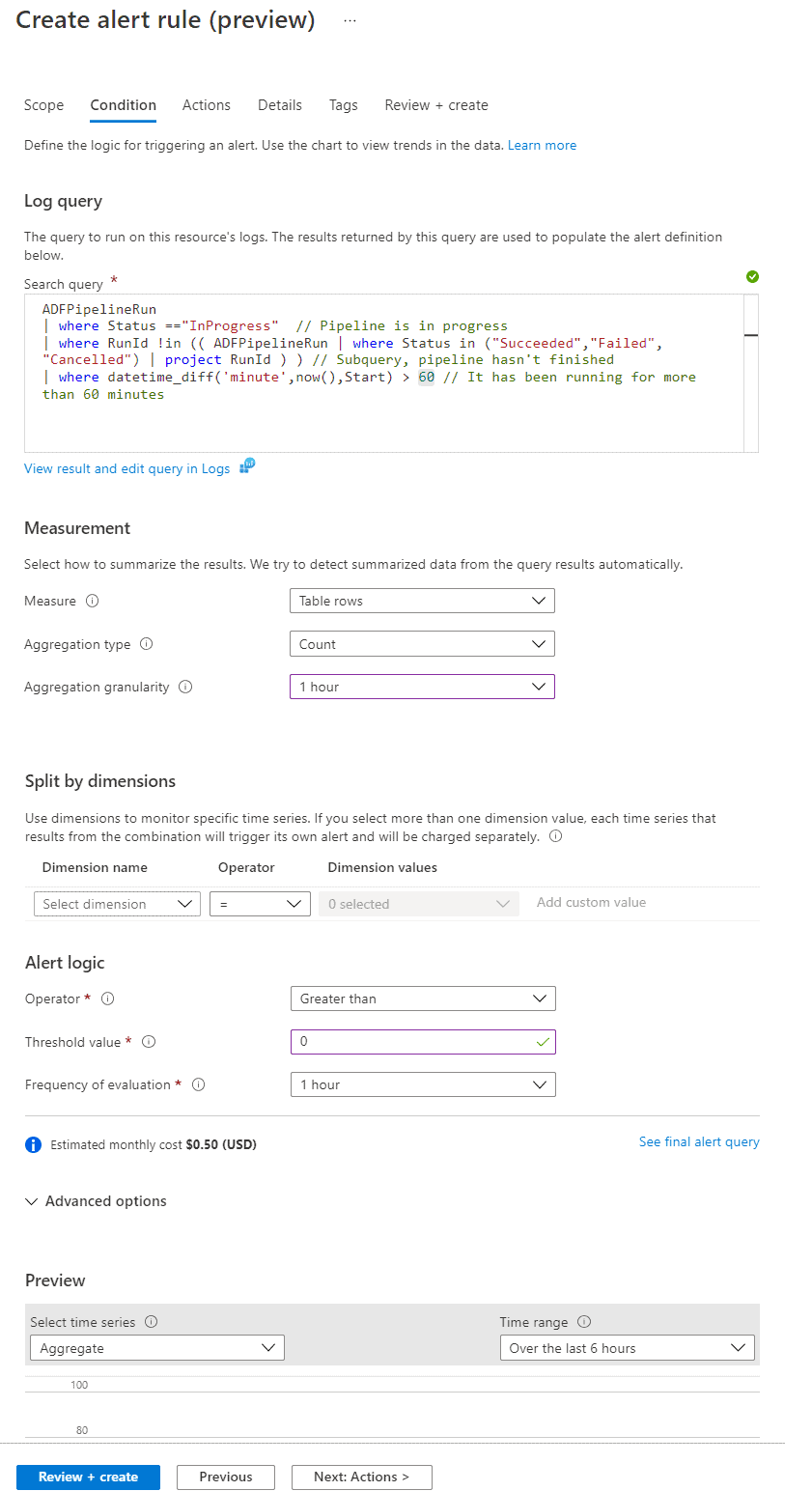

Finally, if you want to create alerts for Long-Running Azure Data Factory pipelines, click the New Alert Rule option.

If you are trying to identify Long-Running Azure Data Factory pipelines running for more than 1 hour, you can evaluate the alert every hour.

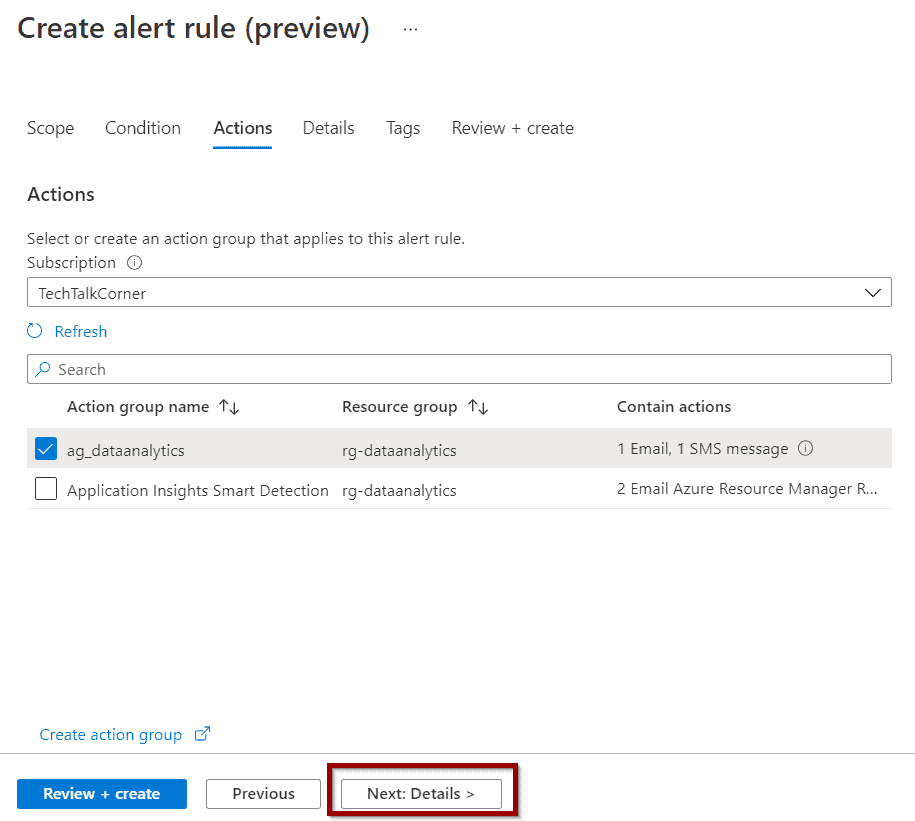

Select the action group. If you need to create one, check this blog post dedicated to alerts and how to create an action group for Azure Data Factory alerts.

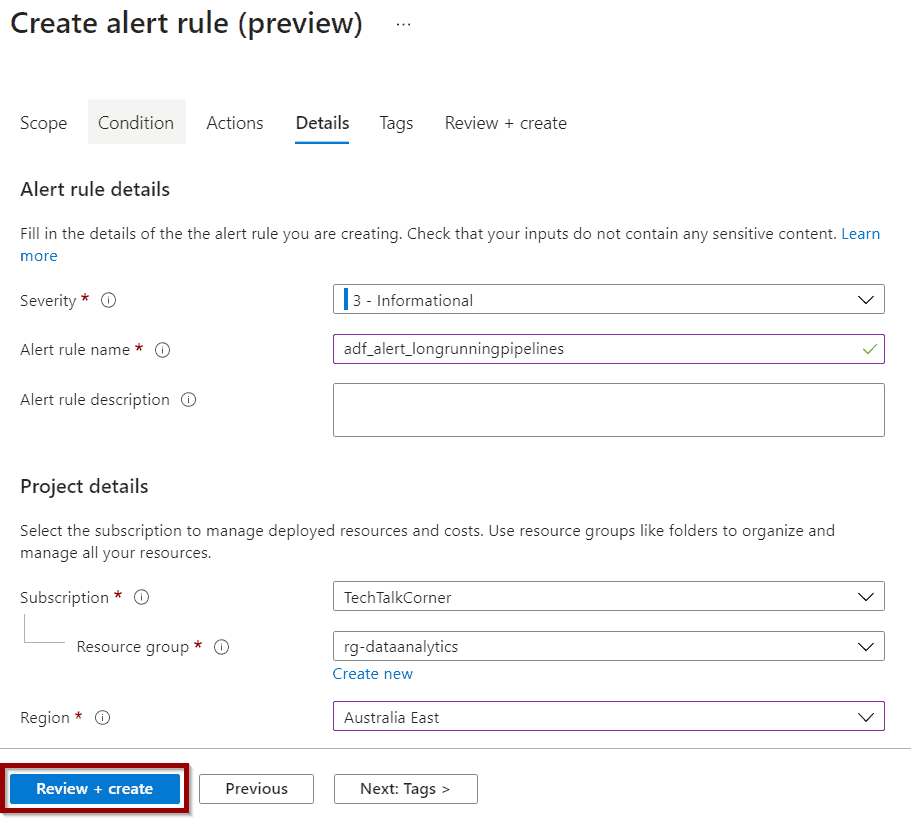

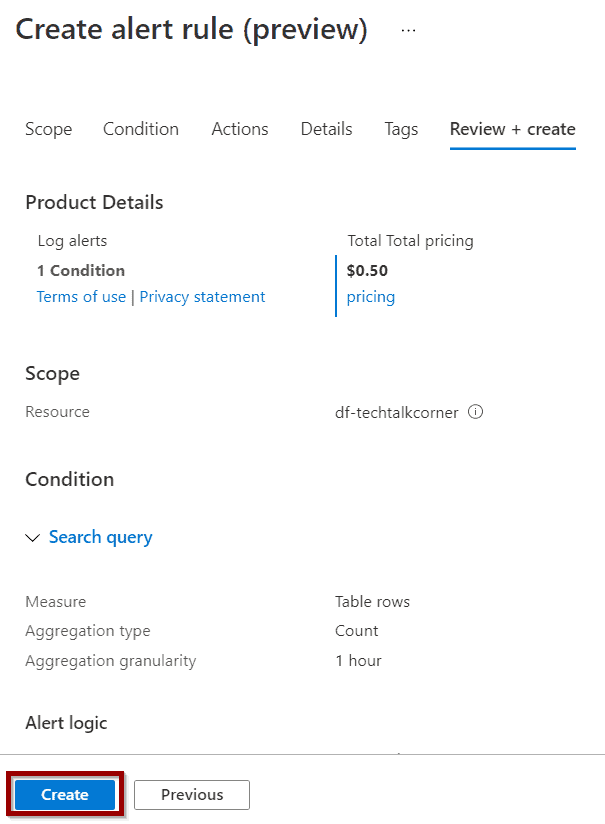

Select a name for the alert and you are ready to review and create.

On the summary page, you’ll see an estimate of the cost of the alert.

Done! Your alerts will be triggered when there are Long-Running Azure Data Factory pipelines.

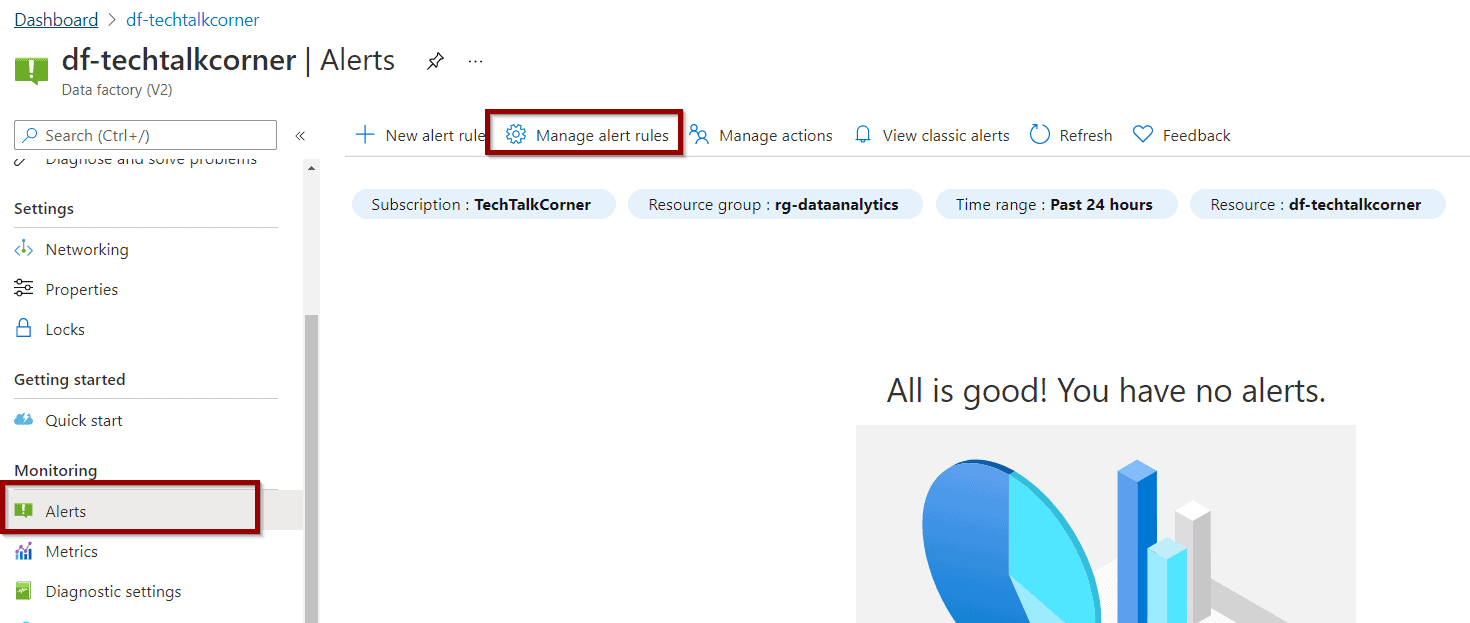

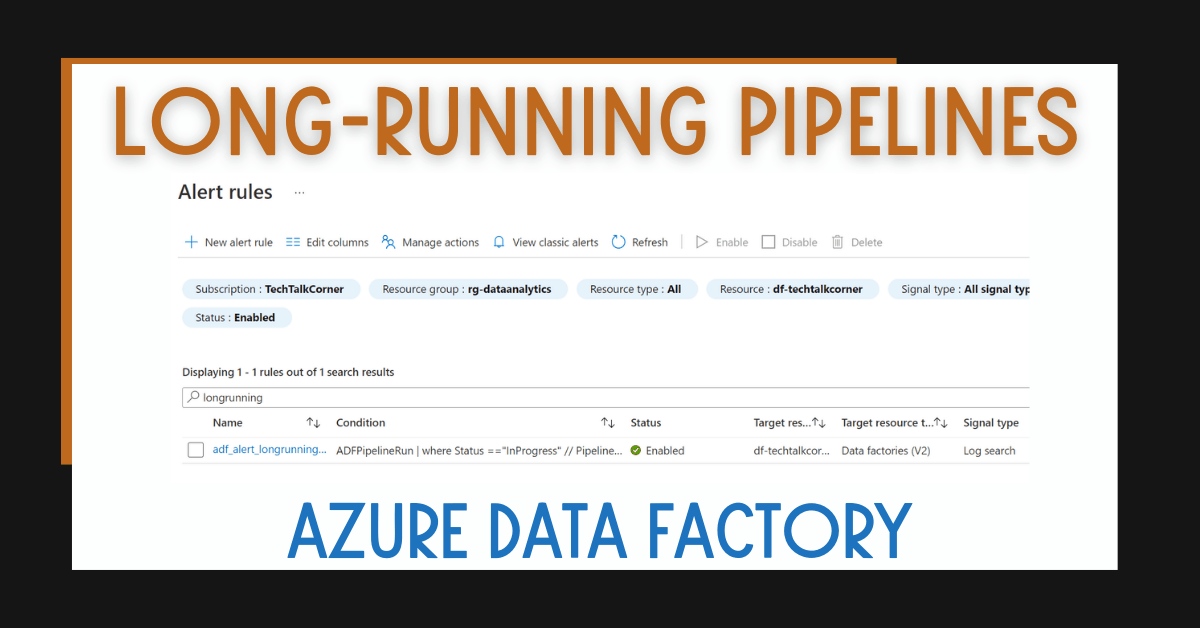

Monitor and Modify the Alert

You can modify and monitor the Long-Running Azure Data Factory pipelines alert from the alerts dashboard.

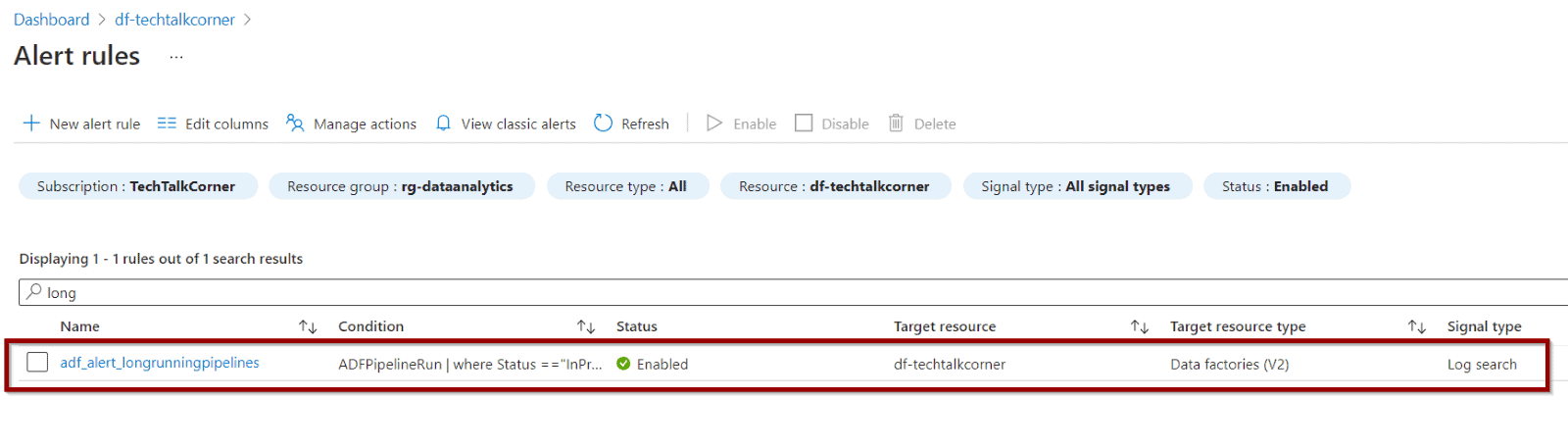

The alert will be displayed as part of the list.

Summary

To sum up, today you learned how to identify and send alerts for Long-Running Azure Data Factory pipelines. You can follow the same process for other Azure Data Factory activities.
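
For activities, a minimal sketch of the same idea might look like the query below. It assumes the ActivityRuns option is enabled in the diagnostic settings and that the logs are written to the ADFActivityRun table. The 30-minute `threshold` and the `RunningFor` column are illustrative choices, not values from this post.

```kusto
// Hypothetical threshold for a single activity; adjust as needed.
let threshold = 30m;
ADFActivityRun
| summarize arg_max(TimeGenerated, *) by ActivityRunId   // keep the latest log record for each activity run
| where Status == "InProgress"                           // the latest record still shows the activity in progress
| extend RunningFor = now() - Start                      // elapsed time as a timespan (illustrative column name)
| where RunningFor > threshold
| project PipelineName, ActivityName, ActivityType, ActivityRunId, Start, RunningFor
| order by RunningFor desc
```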

By using this approach, you don’t need to build additional capabilities to cover this functionality.

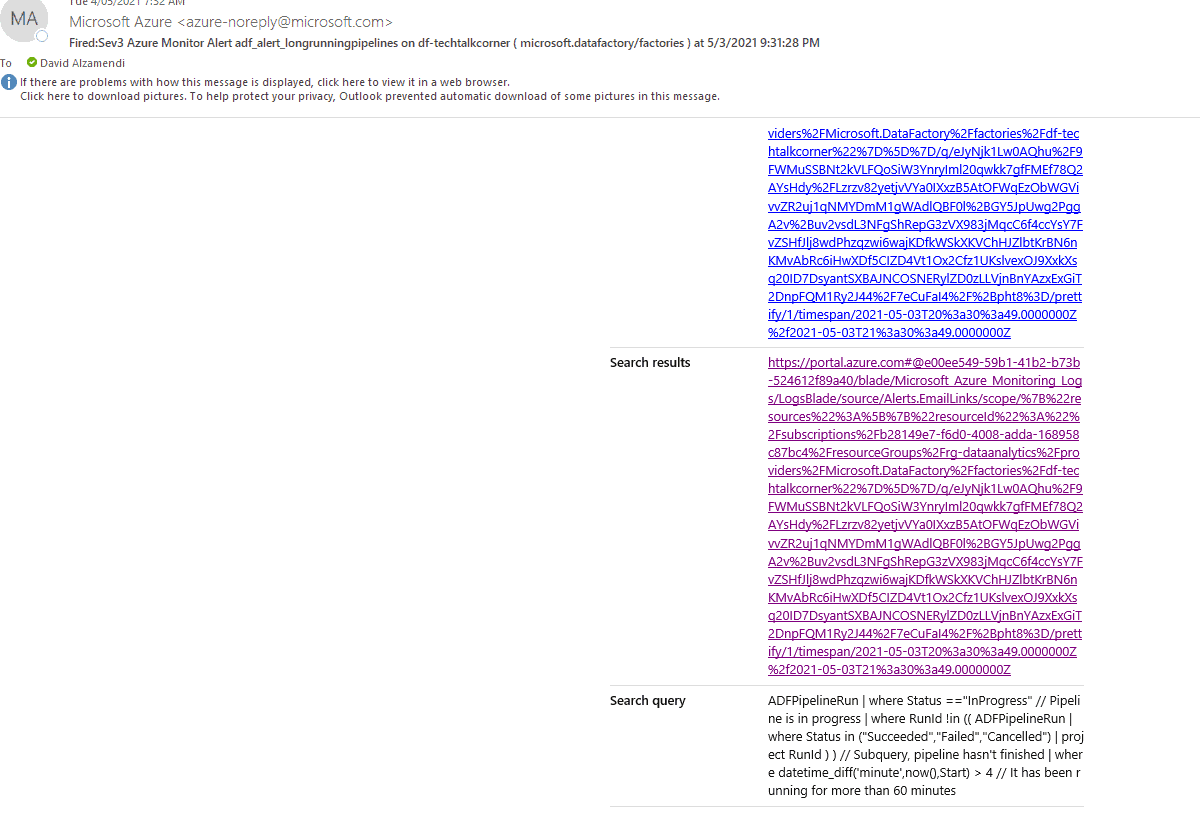

When you receive the alert, you’ll have a link that takes you to Azure Log Analytics.

What’s Next?

In upcoming blog posts, we’ll continue to explore some of the features within Azure Services.

Please follow Tech Talk Corner on Twitter for blog updates, virtual presentations, and more!

As always, please leave any comments or questions below.

1 Response

kevin

25 . 10 . 2021

Hello,

Is it possible to automate cancelling a pipeline run that has been running for more than x hours?