Are you looking to easily refresh your Azure Analysis Service models and partitions from Azure Data Factory? In this blog post, I show how easy it is to include this feature in Azure Data Factory.

This tutorial will help you build a pipeline that allows you to asynchronously refresh any Azure Analysis Services model using parameters. Once you finish this tutorial, you’ll have a pipeline that you can use and extend for more specific needs.

Table of Contents

Asynchronous execution vs synchronous execution

- Asynchronous execution – when you trigger a refresh of a model, but you don’t know the final status of that refresh. You get the response of the REST API call, but not the final status of the refresh. Most REST APIs work under this method.

- Synchronous execution – when you trigger a refresh, the response will not come back until the execution finishes, so you know the status of the execution.

Can you build workaround in Azure Data Factory? Yes, I will cover this in an upcoming post, but let’s build something reusable first.

In addition, you need to consider that a synchronous execution means that you need to pay more for your Azure Data Factory pipelines.

Giving Azure Data Factory access to Azure Analysis Services

Firstly, you need to give Azure Data Factory access to your Azure Analysis Services model to perform these operations using managed service identities.

There isn’t an easy way to do this from the Azure portal without getting confused. So, let’s use PowerShell.

Azure Data Factory has a managed identity created in the backend that you can use to access Analysis Services.

You need to get your App ID using Azure Active Directory (Option A) or with the PowerShell script provided below (Option B).

If you are trying to refresh from Azure Synapse Analytics, use the Azure Active Directory Method.

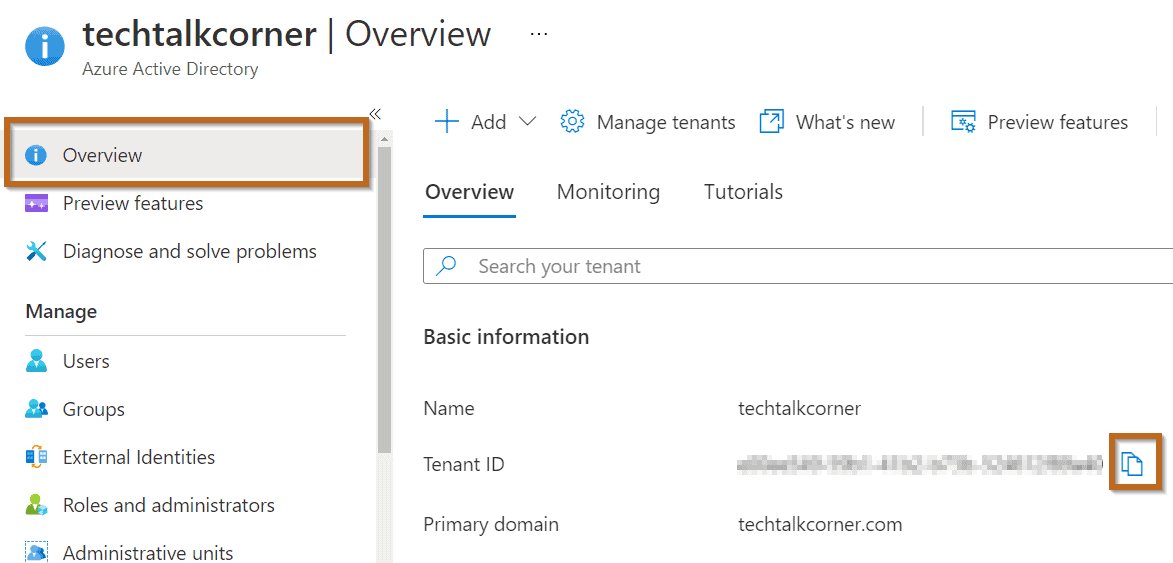

Pre-requisite Option A: Get Managed Identity Id from Active Directory

Copy the Tenant ID.

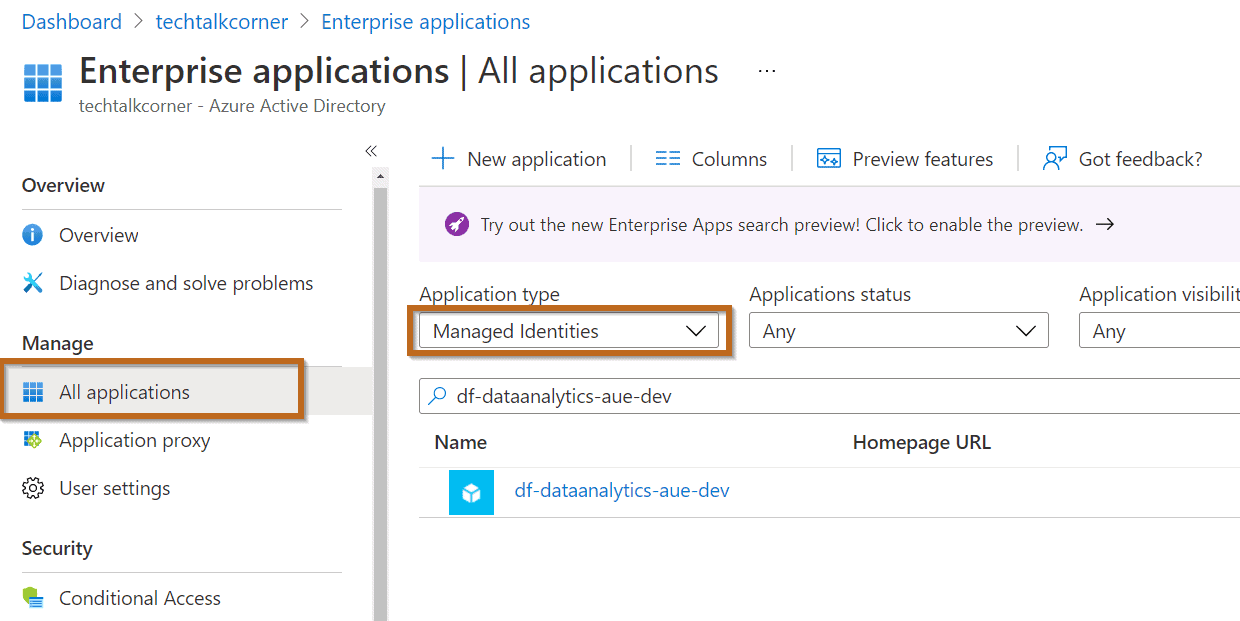

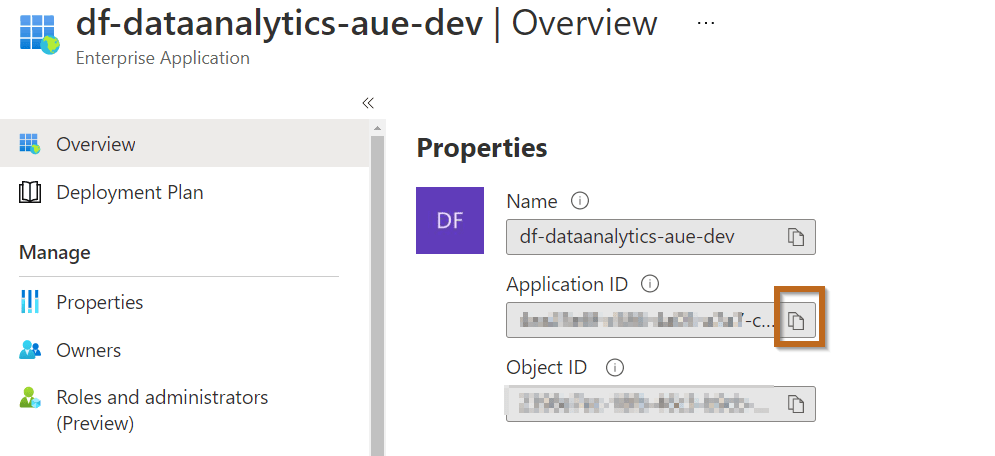

Find your Azure Data Factory or Synapse Analytics:

Copy your App ID.

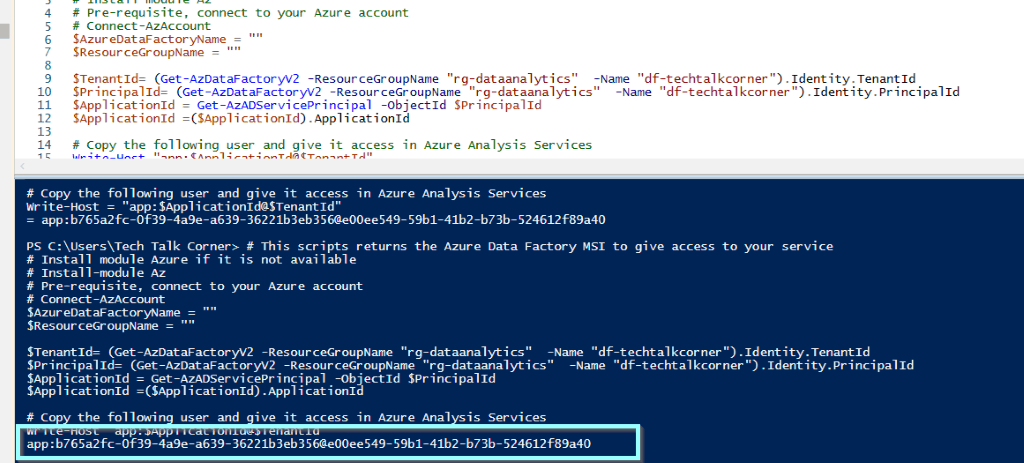

Pre-requisite B: Get Managed Identity Id with script (only Azure Data Factory)

Install-module Az

Import-Module Az.DataFactory

In some scenarios, you will need to change the policy:

Set-ExecutionPolicy -ExecutionPolicy Unrestricted

Execute this command in Power Shell and copy the output, download a copy of the script from here.`

# This script returns the Azure Data Factory MSI to give access to your service

# Install module Azure if it is not available

# Install-module Az

# Import-Module Az.DataFactory

# Pre-requisite, connect to your Azure account

# Connect-AzAccount

$AzureDataFactoryName = ""

$ResourceGroupName = ""

$TenantId= (Get-AzDataFactoryV2 -ResourceGroupName "rg-dataanalytics" -Name "df-techtalkcorner").Identity.TenantId

$PrincipalId= (Get-AzDataFactoryV2 -ResourceGroupName "rg-dataanalytics" -Name "df-techtalkcorner").Identity.PrincipalId

$ApplicationId = Get-AzADServicePrincipal -ObjectId $PrincipalId

$ApplicationId =($ApplicationId).ApplicationId

# Copy the following user and give it access in Azure Analysis Services

Write-Host "app:$ApplicationId@$TenantId" # This scripts returns the Azure Data Factory MSI to give access to your service

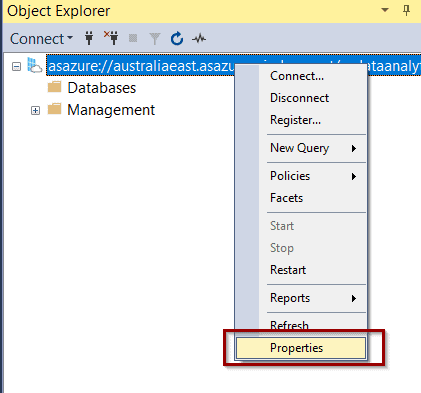

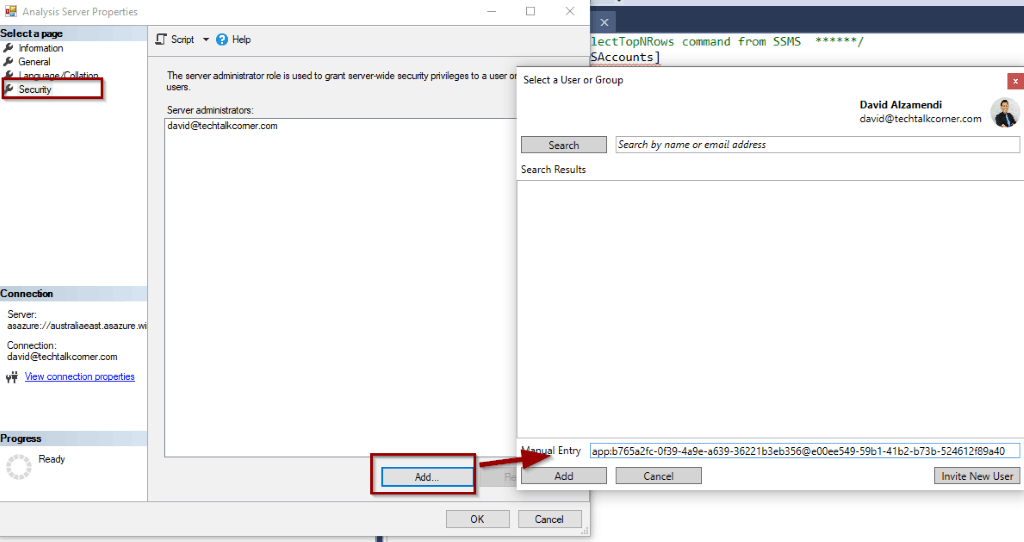

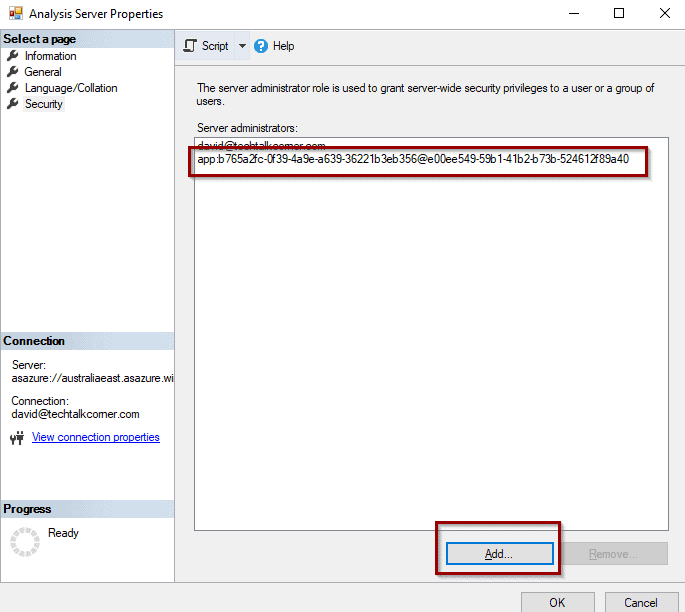

Grant Azure Data Factory Access

Then, you need to give Azure Data Factory access to Analysis Services.

Go to security and click “add.”

Make sure you include “app:” at the beginning.

Finally, don’t forget to save it.

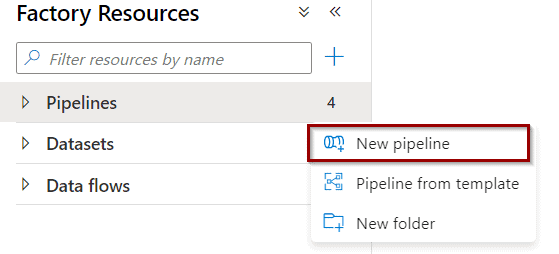

Creating the reusable pipeline

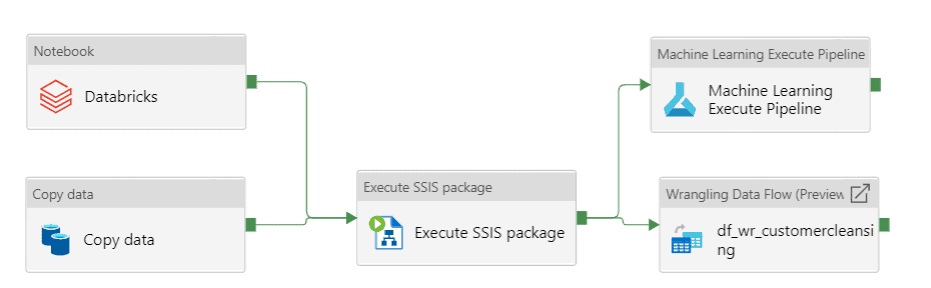

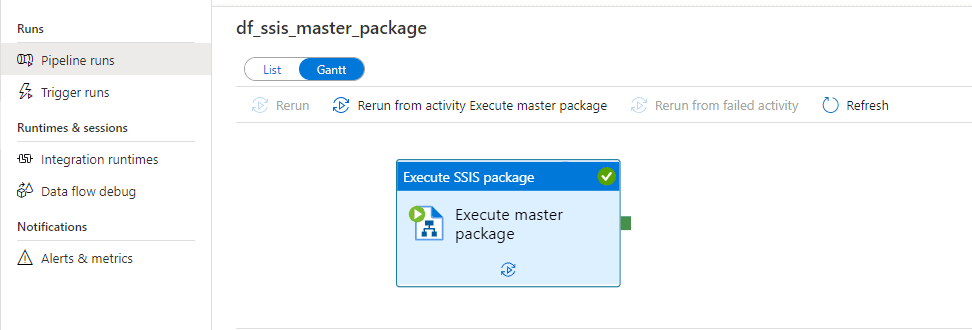

Azure Data Factory can refresh Azure Analysis Services tabular models, so let’s create a pipeline. You can download a copy of the pipeline from here.

The solution also works if the model has spaces in the name.

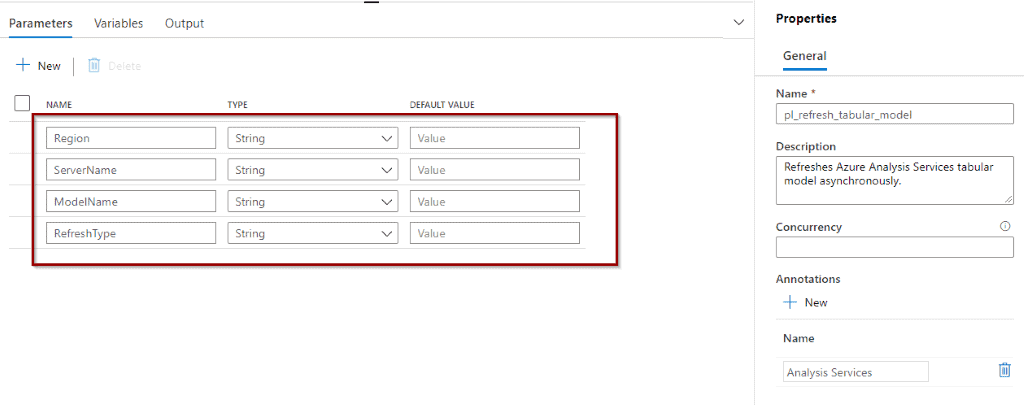

First, create the required parameters to make the pipeline reusable across different models. Don’t forget to define a generic name for your pipeline.

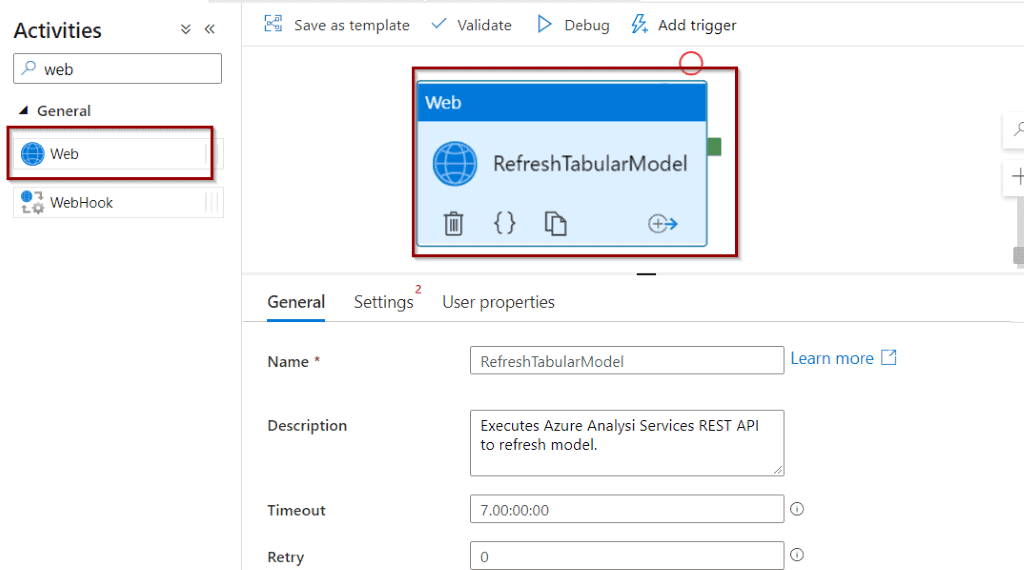

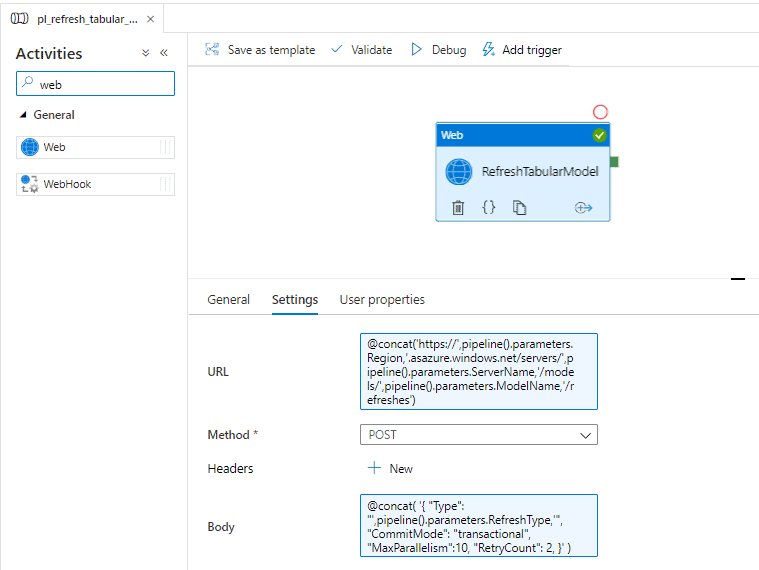

Drag and drop a Web activity in the pipeline. To refresh the model, I use Azure Analysis Services REST APIs.

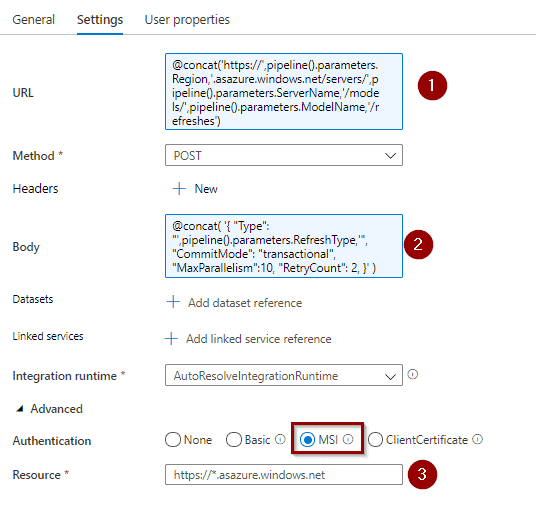

Configure the Web Activity as follows. You want to use the parameters that we have previously created. Copy the strings.

- REST API endpoint

@concat('https://',pipeline().parameters.Region,'.asazure.windows.net/servers/',pipeline().parameters.ServerName,'/models/',pipeline().parameters.ModelName,'/refreshes') - HTTP Body

@concat(

'{

"Type": "',pipeline().parameters.RefreshType,'",

"CommitMode": "transactional",

"MaxParallelism":10,

"RetryCount": 2,

}'

) - https://*.asazure.windows.net

You can see in the picture above that we are using managed identities (MSI) to access Azure Analysis Services Rest API.

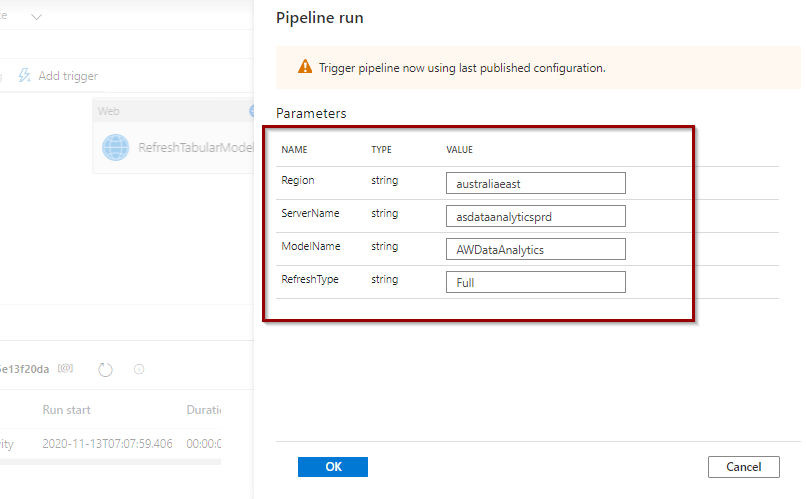

Now let’s test it. Include the correct values for the parameters.

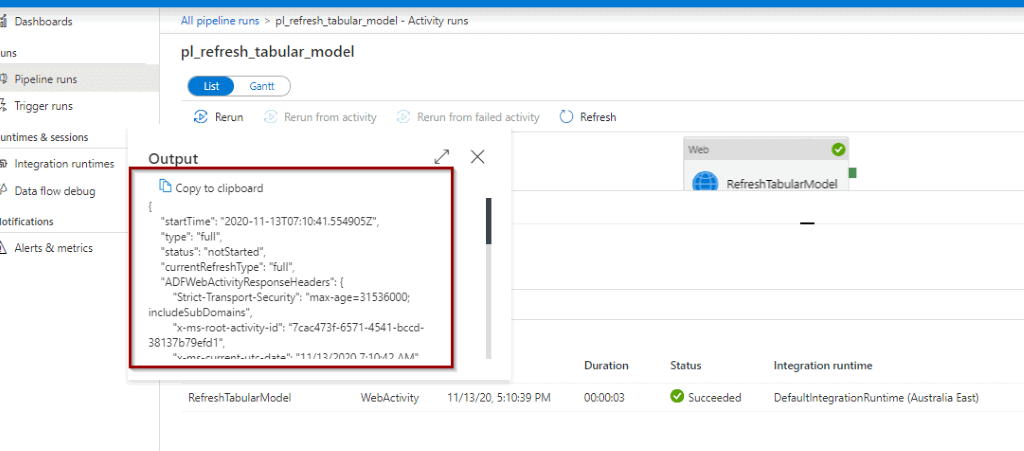

The output should tell you if it was able to connect and trigger the refresh. Remember, this is an asynchronous execution so you won’t know the status of the refresh execution.

Summary

You have created a reusable Azure Data Factory pipeline that you can use to refresh Azure Analysis Services models asynchronously. It’s easy to extend it with new futures.

What’s Next?

In upcoming blog posts, we’ll continue to explore Azure Data Services features.

Please follow Tech Talk Corner on Twitter for blog updates, virtual presentations, and more!

13 Responses

zahid hossain

31 . 05 . 2021Where should I execute the ps1 script? In my desktop?

David Alzamendi

27 . 06 . 2021Hi Zahid,

Yes, you can execute it in your desktop, it just creates the MSI that you need in Azure Active Directory.

Regards,

David

Tirta

26 . 07 . 2021Hi David, can we also connect from Synapse Data Factory?

David Alzamendi

15 . 08 . 2021Hi Tirta,

This will work with Synapse Analytics, get the application Id from Azure Active Directory as the script is only for Azure Data Factory.

Regards,

David

Colin

02 . 08 . 2021how do we pass in a model name in the data factory parameter where the model name contains a space?

David Alzamendi

15 . 08 . 2021Hi Colin,

I have just tested the solution with spaces in the model name and it seems to work as well.

Let me know if you still have problems.

Regards,

David

Ravi Shukla

20 . 08 . 2021Hello Team ,

I am using REST API method for processing the data on azure analysis cube that is working fine .

I need to create new partition for each month and process only particular month partition month only .

i.e. we are running on AUG 2021 hence we need to create the partition as TableName_Aug2021 and query will come as “select * from table name where date between ‘1-aug-2021’ and ’31-aug2021′”.

once this partition gets created we need to process only this TableName_Aug2021 this partition using REST API method .

Please let me know if you need any information .

Mitch

18 . 09 . 2021I would create an azure function that uses the TOM to create the partitions, then pass the current partition name you want processed to this solution.

Govardhan

08 . 09 . 2021Hello David – I followed the instructions and used Service Principal instead of MSI for Authentication. When I run the pipeline manually, it refreshed the AAS database with the new data. However, sometimes I do see that the same ADF pipeline ran successfully, but the data is not refreshed as indicated by the LastRefreshDate for the AAS database in SSMS. I do not know why this is happening. Is it because the OAuth2 token expires every 1 hour? Please advise.

Thanks

Hele

13 . 09 . 2021Did you publish the workaround for synchronous execution yet?

David Alzamendi

17 . 09 . 2021Hi Hele,

I don’t have that blog post yet, but I added this idea to my to-do list.

Regards,

David

RJust

07 . 10 . 2021Hello – this was very helpful for a beginner like myself in the world of Azure. I was able to get this working to refresh a single AAS but is it possible to refresh all models in a server or refresh specific models without creating a new pipeline for each one?

Singgih

21 . 05 . 2024I have tried this but results in error Unauthorized.

Did I miss something?