In this blog post, I’ll show you a design pattern to export parquet files with column names with spaces. You can use Azure Data Factory or Azure Synapse Analytics to overcome this problem. I will use dynamic T-SQL

Table of Contents

Introduction

To begin, one of the limitations when exporting data to parquet files in Azure Synapse Analytics or Azure Data Factory is you can’t export tables that have columns with blank spaces in their names.

One of my readers, Marcus, asked me about how to do this recently, so I thought I’d write about it. (Leave me a comment if you ever have any questions, and your answer may turn into a helpful blog post!)

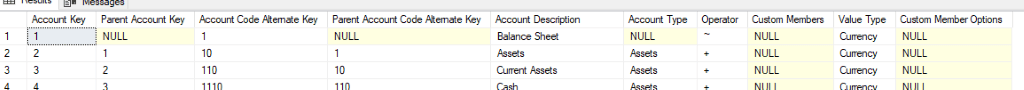

For example, the following table cannot be exported with column header because the names have spaces.

The solution is to rename the column names when querying the data while minimizing the development effort.

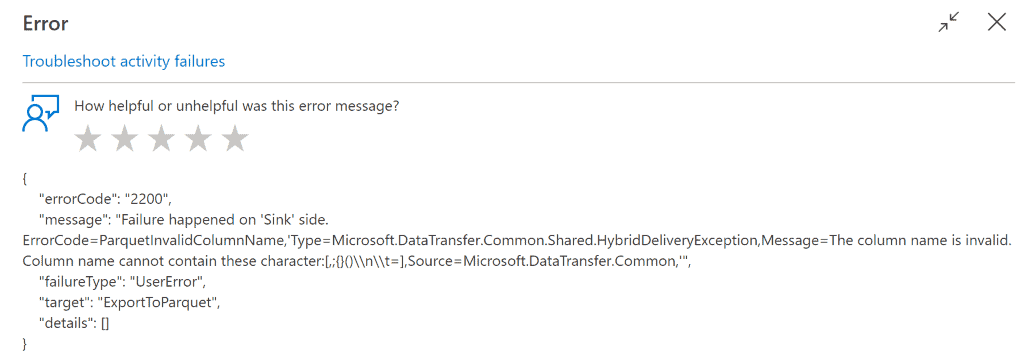

If you try to do it, you get the following error:

"message": "Failure happened on 'Sink' side. ErrorCode=ParquetInvalidColumnName,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=The column name is invalid. Column name cannot contain these character:[,;{}()\\n\\t=],Source=Microsoft.DataTransfer.Common,'",

Pre-requisites (recommended)

First, have a look at this blog post on how to export parquet files in Azure to understand the solution.

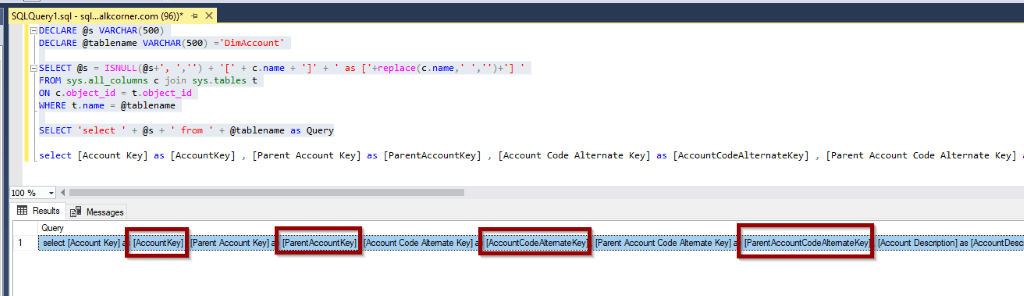

Prepare the Dynamic T-SQL Code

In this example, I’ll use Azure SQL Database as the source system. If you are using any Relational Database Management System (RDBMS), the design pattern will be the same. Just change the dynamic queries.

The main idea is to generate a dynamic query that modifies the column names to remove the spaces. If you haven’t worked with Dynamic SQL before, I suggest learning about it. I learned this in my early years from my mentor Enrique Viamontes.

In T-SQL, you can use:

DECLARE @s VARCHAR(MAX)

DECLARE @tablename VARCHAR(500) ='DimAccount'

SELECT @s = ISNULL(@s+', ','') + '[' + c.name + ']' + ' as ['+replace(c.name,' ','')+'] '

FROM sys.all_columns c join sys.tables t

ON c.object_id = t.object_id

WHERE t.name = @tablename

SELECT 'select ' + @s + ' from ' + @tablename as Query

Export Parquet Files with Column Names with Spaces

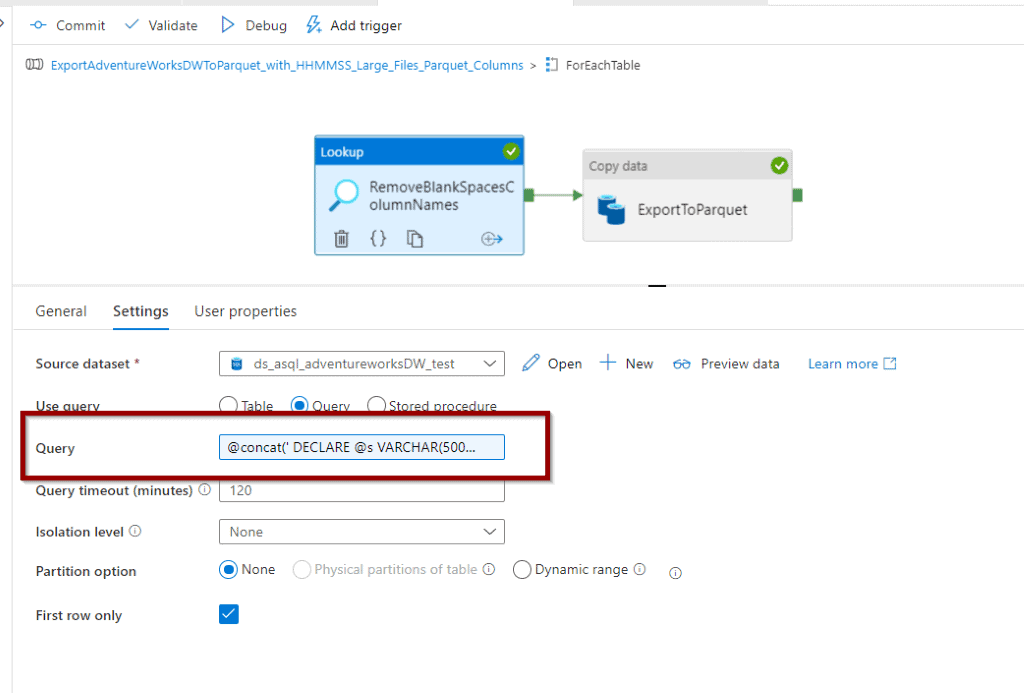

Now, let’s include the code in an integration pipeline (Azure Data Factory or Synapse Analytics) using a Lookup Activity.

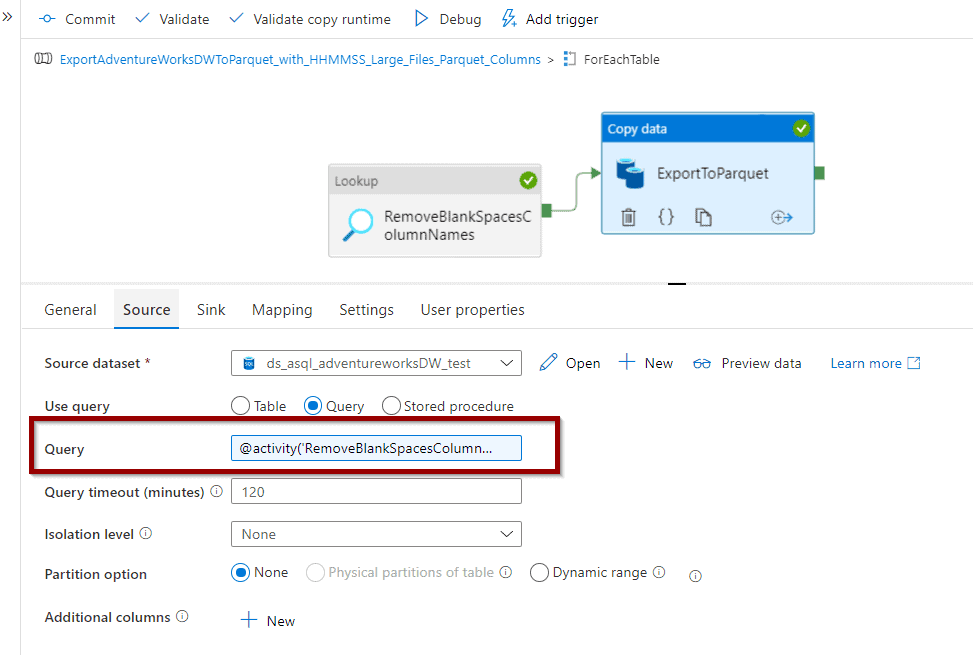

In your ForEachTable, add a lookup activity as follows and click the query.

Paste the following query:

@concat('

DECLARE @s VARCHAR(500)

DECLARE @tablename VARCHAR(500) = ''', item().Table_Name ,'''

SELECT @s = ISNULL(@s + '', '','''') + ''['' + c.name + '']'' + '' as [''+replace(c.name,'' '','''')+''] ''

FROM sys.all_columns c join sys.tables t

ON c.object_id = t.object_id

WHERE t.name = @tablename

SELECT ''select '' + @s + '' from '' + @tablename as Query') Now, modify the copy activity source query.

Paste the following code.

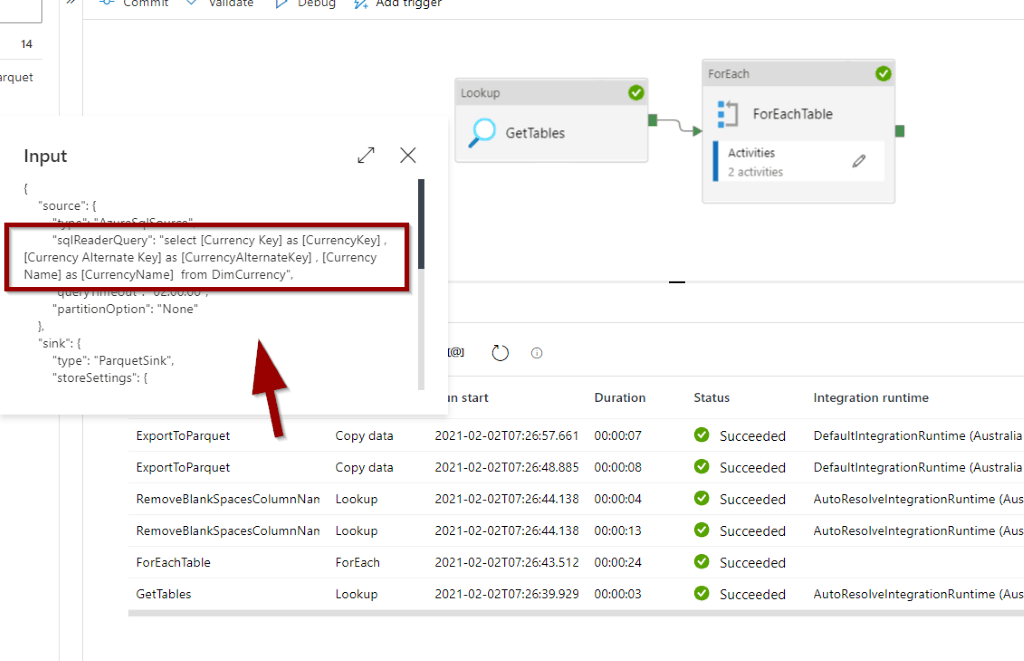

@activity('RemoveBlankSpacesColumnNames').output.firstRow.Query Now, the copy activity will export the data correctly by renaming the column names.

Summary

Today you have learned how to overcome one of the limitations when working with parquet files.

What’s Next?

In upcoming blog posts, we’ll continue to explore Azure Data Service features.

Please follow Tech Talk Corner on Twitter for blog updates, virtual presentations, and more!

12 Responses

Kasper Lindblad Nielsen

09 . 05 . 2021This was extremely helpful thank you so much David! The example easily extends to other parquet special characters by just nesting the replace function. I will have to look into these dynamic queries it seems like a very powerful tool!

David Alzamendi

12 . 05 . 2021Thank you for your feedback Kasper, dynamic queries are great in combination with Azure Data Factory!

Ajay

14 . 06 . 2021Hi Kasper,

I am new to query language and ADF, Can I know how to extend to other parquet special characters like [,;{}()\n\t=] which can cause errors when converting to parquet.

David Alzamendi

27 . 06 . 2021Hi Ajay,

The query shared in this article can be modified to meet your needs. You will need to remove them. In T-SQL, you can use https://docs.microsoft.com/en-us/sql/t-sql/functions/replace-transact-sql

Example:

select replace(

replace(

replace(‘Test Column[‘ + char(13),’ ‘,”) — Space

,'[‘,”) — Bracket

,char(13),”) — Carriage return

Regards,

David

Sid

10 . 08 . 2021Hi David,

Is there a way to port this solution to MySQL source?

Regards,

Sid

prateek gandhi

24 . 11 . 2021Hi SID,

Did you get any solution for mysql source?

Regards

Prateek

Zac

23 . 02 . 2022This is excellent in theory, but when trying to implement it in practice, it doesn’t seem to work. The comma introduced in the below line ends up placing a comma at the end of the list of columns, before the from clause in the final product:

SELECT @s = ISNULL(@s + ”, ”,””) + ”[” + c.name + ”]” + ” as [”+replace(c.name,” ”,””)+”] ”

As such, the operation fails citing a syntax error near “from”, because there is a comma before it. Can you help me understand what I’m missing? Thanks!

Zac

24 . 02 . 2022Sorry, I figured it out. The value for @s in my use case needed to be longer than 500 characters, so it was truncating the column list until I expanded the length of that variable. The solution works great, thanks!

David Alzamendi

01 . 04 . 2022Thank you for the feedback Zac, I don’t even quite remember why I selected VARCHAR(500) (I guess just as an example). I changed it to MAX.

Luke

23 . 03 . 2022Hi David, Thank you for taking the time to share your solution. It is an interesting way to dynamically change the query per table in a ForEach. Very helpful. I did need to increase the size of @s due to truncation of larger views and did a simple modification to integrate using the table’s schema. Interestingly we have some curated views for tabular models that have some quoted columns with spaces that we are caching to parquet files and reintroducing the spaces back when consuming the parquet files out through serverless Synapse by leveraging the spaces in the original view’s metadata but removing spaces from the added with() block in the external view. Your solution was helpful in that. We used sys.views instead of sys.tables but I left it as sys.tables to be as close as possible to your original. Thanks again!

@concat(‘

DECLARE @s VARCHAR(MAX)

DECLARE @tablename VARCHAR(500) = ”’, item().Table_Name ,”’

DECLARE @schemaname VARCHAR(500) = ”’, item().Schema_Name ,”’

DECLARE @fulltablename VARCHAR(1000) = ”’, item().Schema_Name,’.’,item().Table_Name ,”’

SELECT @s = ISNULL(@s + ”, ”,””) + ”[” + c.name + ”]” + ” as [”+replace(c.name,” ”,””)+”] ”

FROM sys.all_columns c

join sys.tables t ON c.object_id = t.object_id

join sys.schemas s ON t.schema_id = s.schema_id

WHERE s.name = @schemaname AND t.name = @tablename

SELECT ”select ” + @s + ” from ” + @fulltablename as Query’)

David Alzamendi

01 . 04 . 2022Amazing contribution Luke! I might review the blog post and include your contribution soon!