If you are trying to pause and resume your Azure Synapse Analytics SQL Pool using Azure Data Factory pipelines or Azure Synapse pipelines, don’t look any further! I will share the solution with you.

If you are looking to do this with Azure Automation Accounts because you don’t use Azure Data Factory or Synapse Integration Pipelines, check this blog post to restart Azure Synapse Analytics SQL Pool using Automation Accounts.

The solution in this document is synchronous, so the pipeline ends when the SQL Pool finishes pausing or resuming.

Table of Contents

Why would you pause your SQL Pool?

To start, while we wait for built-in features like auto-scaling or auto-pause, you want to make sure that you are optimising resources and on-going cost in Azure.

Many businesses don’t need access to the Data Warehouse layer 24×7 and they use Power BI Power BI to display information required to make decisions.

In these scenarios, you should be pausing the Azure Synapse Analytics SQL Pool to decrease on-going costs.

While you will continue paying for storage, you will stop paying for compute power (Data Warehouse Units, AKA DWUs)

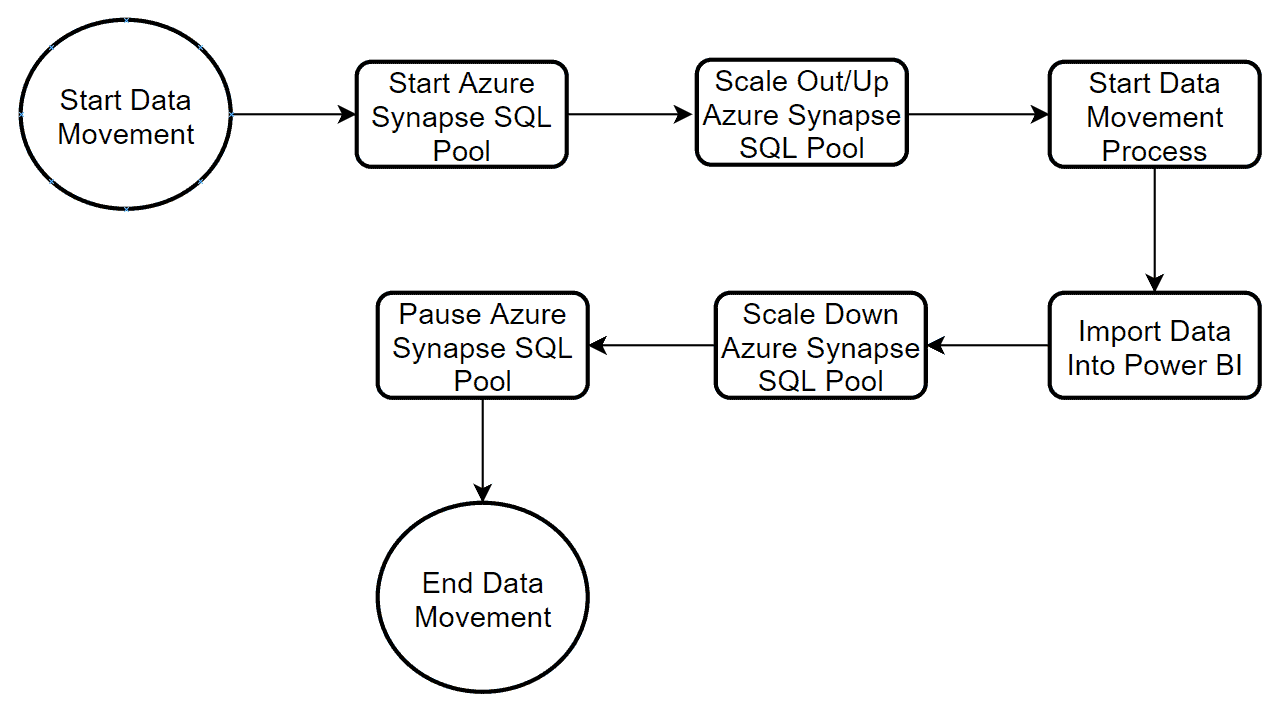

Example data movement process:

Download the solution to pause and resume Azure Synapse Analytics

If you are looking for a solution to pause and resume your Azure Synapse Analytics SQL Pool, download it from the following links:

- Download pause and resume pipeline for dedicated SQL Pool in Azure Synapse Analytics Workspaces

- Download pause and resume pipeline for dedicated SQL Pool outside Azure Synapse Analytics Workspaces (formerly known as Azure SQL Data Warehouse).

Azure Synapse Analytics SQL Pools use 2 different sets of APIs, so we have 2 solutions.

- For a dedicated SQL Pool created under the Azure Synapse Analytics Workspace experience

- For a dedicated SQL Pool created as a standalone service (formerly known as Azure SQL Data Warehouse)

Pre-requirements

Firstly, before you can use the solution, you need to give access to your Azure Data Factory service or Azure Synapse Analytics Workspace to manage the SQL Pool.

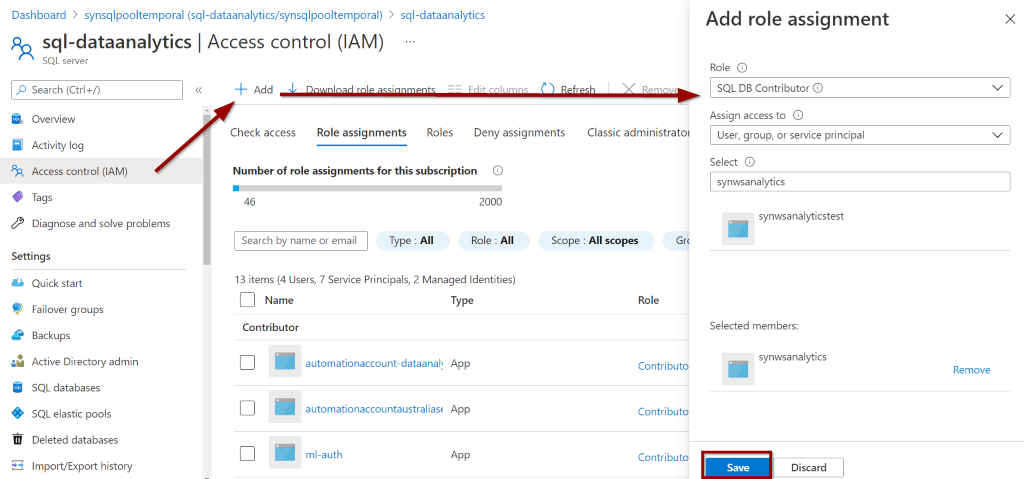

For Dedicated SQL Pool Without Azure Synapse Workspaces:

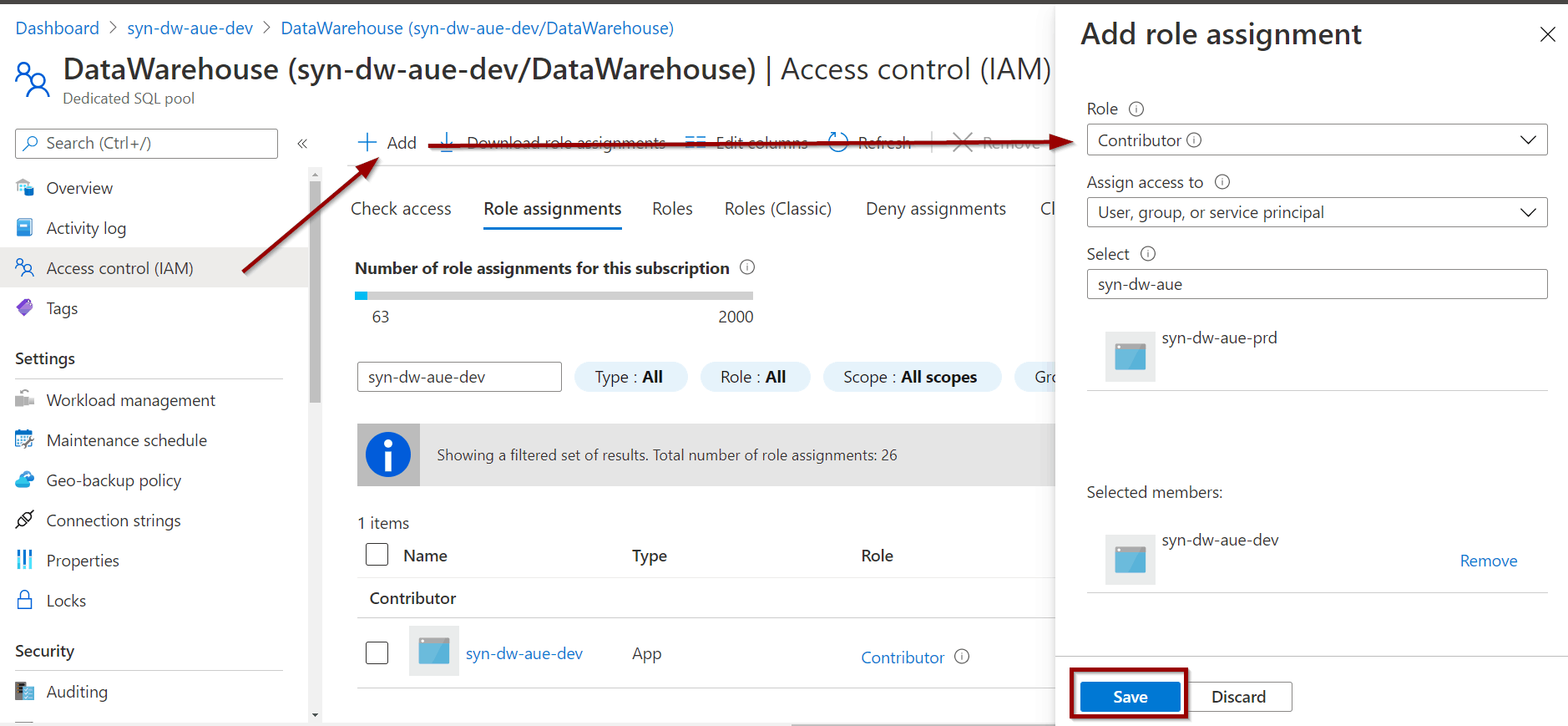

For Dedicated SQL Pool in Azure Synapse Workspaces:

You might experience the following error if your pipeline does not have access.

{ "errorCode": "2108", "message": "{\"error\":{\"code\":\"AuthorizationFailed\",\"message\":\"The client 'dd15054d-92d8-4c5c-9b67-fc08a0b75eec' with object id 'dd15054d-92d8-4c5c-9b67-fc08a0b75eec' does not have authorization to perform action 'Microsoft.Sql/servers/databases/Resume/action' over scope '/subscriptions/b28149e7-f6d0-4008-adda-168958c87bc4/resourceGroups/rg-dataanalytics/providers/Microsoft.Sql/servers/sql-dataanalytics/databases/synsqlpooltemporal' or the scope is invalid. If access was recently granted, please refresh your credentials.\"}}", "failureType": "UserError", "target": "Resume or Pause Azure Synapse Analytics", "details": [] } Import Solutions

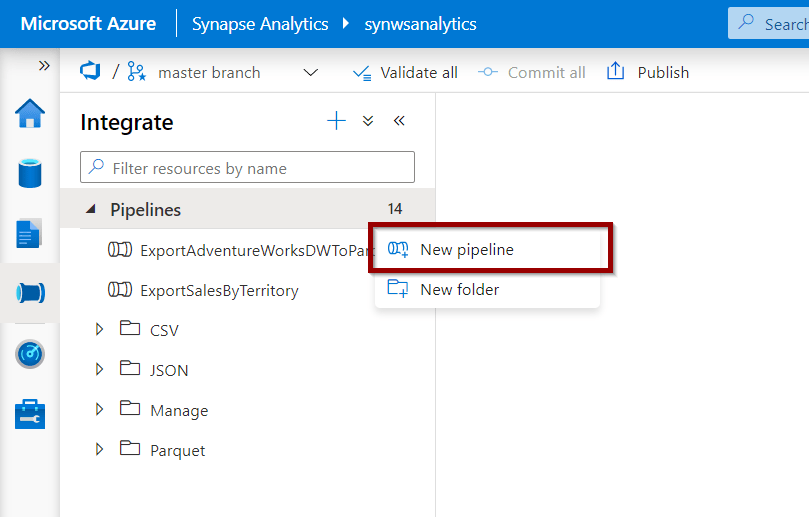

First, create a new pipeline.

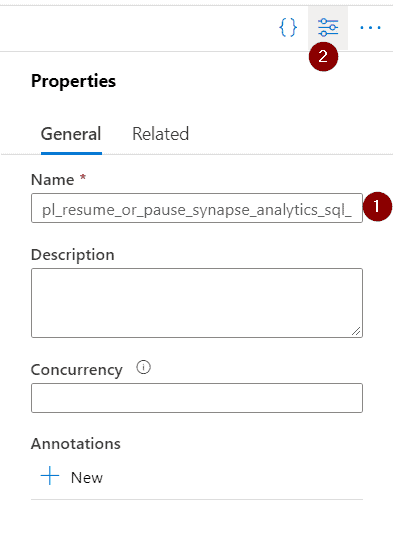

Rename the pipeline (1) “pl_resume_or_pause_synapse_analytics_sql_pool” and click the JSON editor (2).

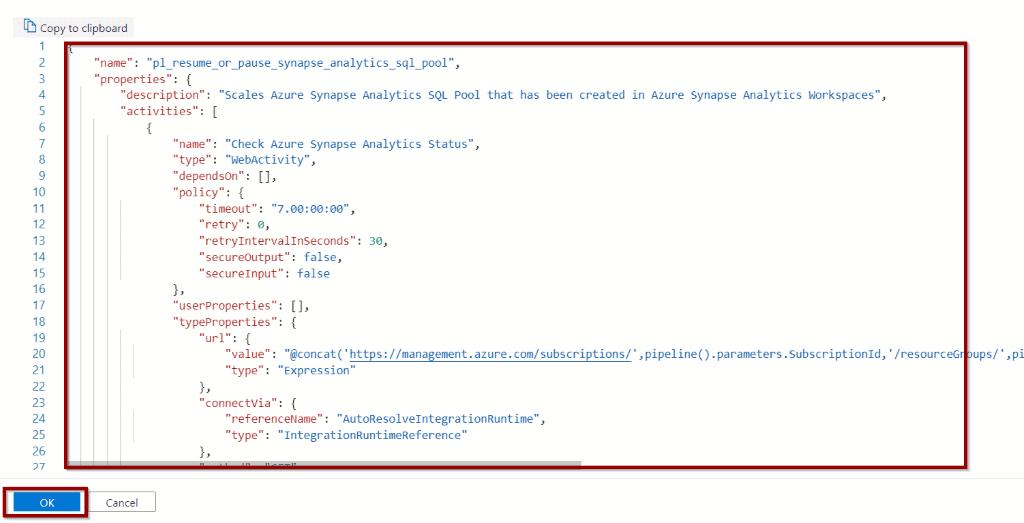

Paste the definition of the pipeline and click ok.

The pipeline has been imported, you can save and use it.

Trigger pipeline to pause and resume your Azure Synapse Analytics

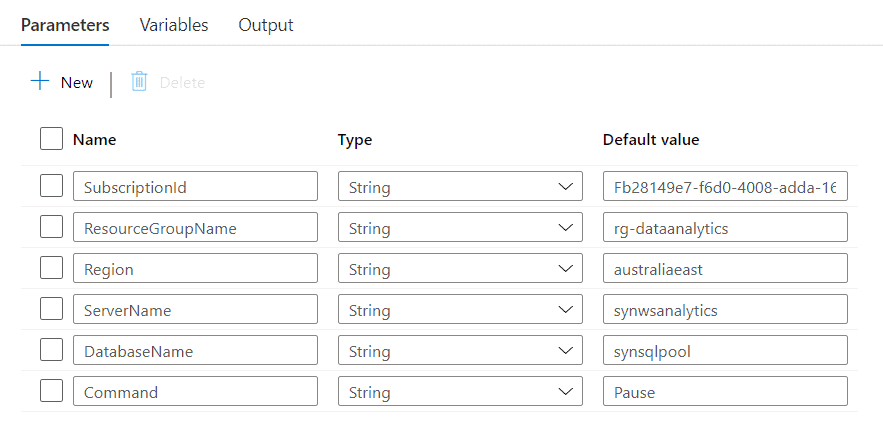

To use the pipeline, there are different parameters that you need to pass:

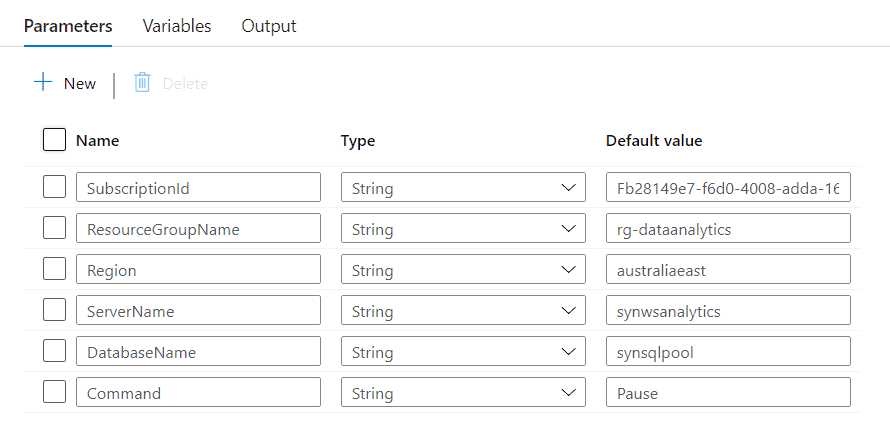

- SubscriptionId, format XXXXXX-XXXXXX-XXXXXXX-XXXXXX

- ResourceGroupName

- Region, for example australiaeast

- ServerName

- DatabaseName (SQL Pool name)

- Command (Pause or Resume)

These parameters help you create the API request to pause or resume your Azure Synapse dedicated SQL Pool.

SubscriptionId is a great candidate to use global parameters. Check out my blog post on Azure Data Factory Global Parameters.

Create a pipeline to pause and resume Azure Synapse Analytics

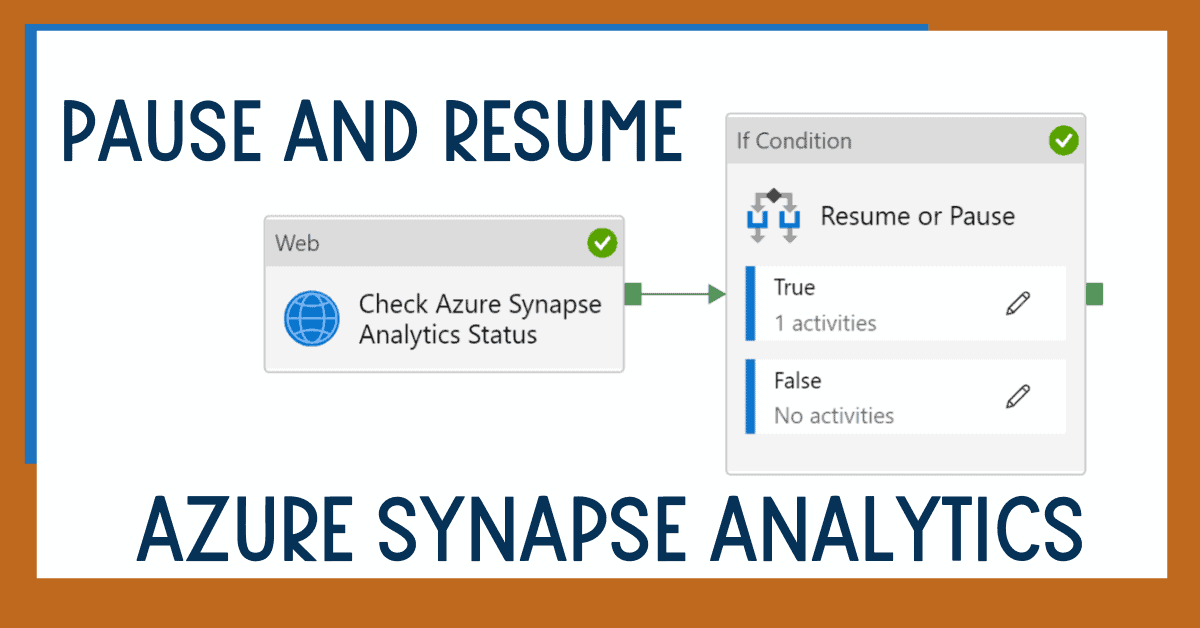

To create the pipeline, you need the following components:

- Add pipeline parameters required for execution

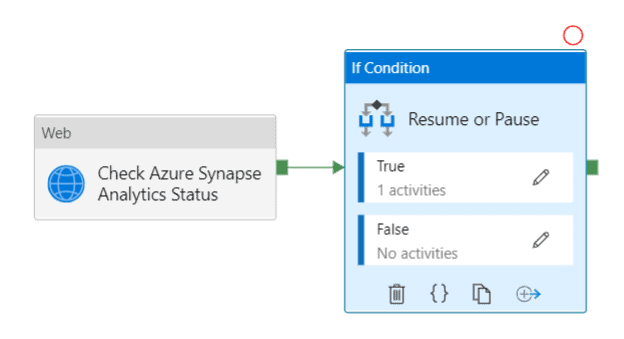

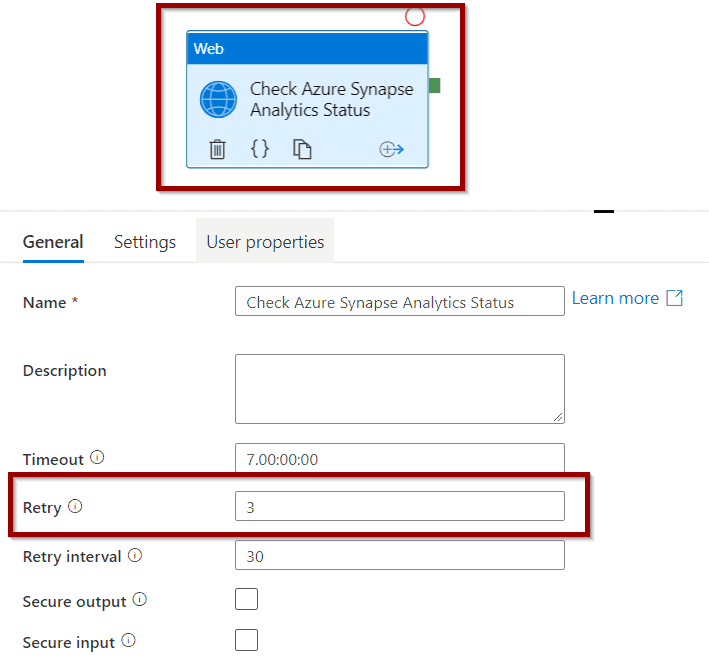

- A Web activity to get the status of your the SQL Pool

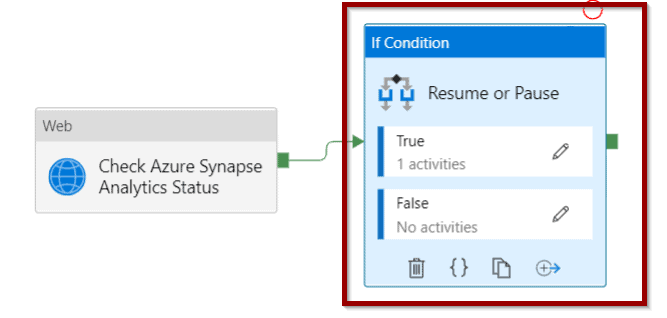

- An If Condition activity to make sure the status of your SQL Pool can be paused or resumed

- A Web activity (inside the If Condition) to Start or Resume your SQL Pool. This takes advantage of the parameter “Command”

Let’s start by adding the parameters to our pipeline.

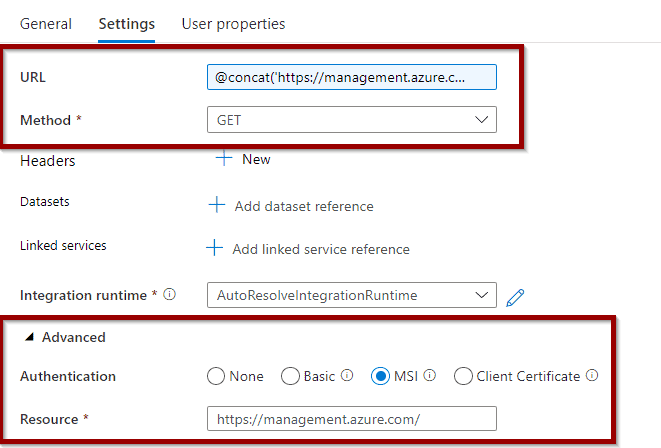

Add the Web Activity, and modify the request to be GET. Change the Retry options if you’d like to increase them.

Go to the settings sections and define Method to GET and Authentication to MSI.

For an Azure Synapse Analytics Workspace Dedicated SQL Pool, copy the following command.

@concat('https://management.azure.com/subscriptions/',pipeline().parameters.SubscriptionId,'/resourceGroups/',pipeline().parameters.ResourceGroupName,'/providers/Microsoft.Synapse/workspaces/',pipeline().parameters.ServerName,'/sqlPools/',pipeline().parameters.DatabaseName,'?api-version=2019-06-01-preview') For a dedicated SQL Pool, outside Synapse (Azure SQL Data Warehouse), copy the following command.

@concat('https://management.azure.com/subscriptions/',pipeline().parameters.SubscriptionId,'/resourceGroups/',pipeline().parameters.ResourceGroupName,'/providers/Microsoft.Sql/servers/',pipeline().parameters.ServerName,'/databases/',pipeline().parameters.DatabaseName,'?api-version=2014-04-01-preview')

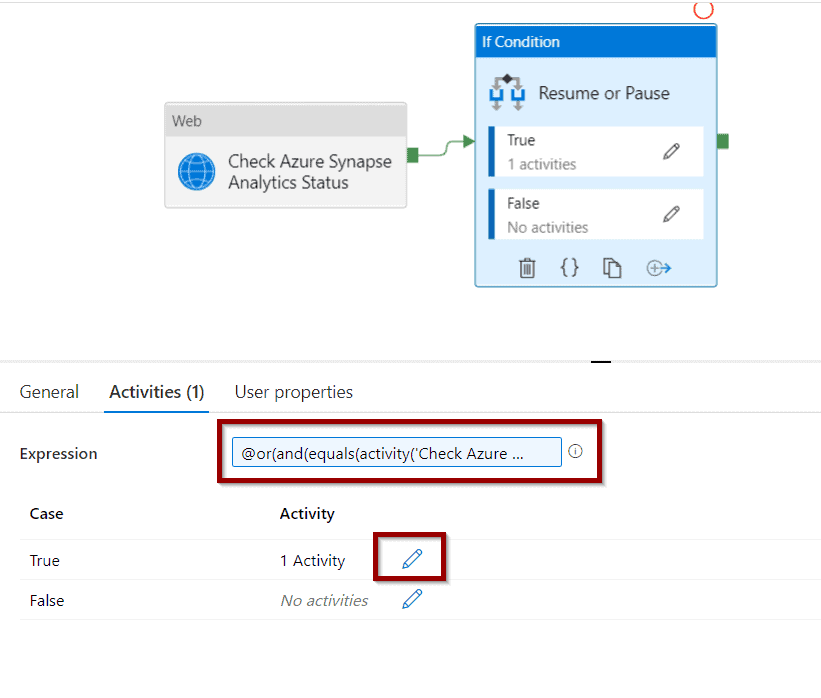

Then, add an If Condition activity to start or pause the Azure Synapse Analytics SQL Pool.

For the If Condition activity expression, copy the following command. It will:

- Resume the Azure Synapse SQL Pool only if the status is Paused

- Pause the Azure Synapse SQL Pool if the status is Online

@or(and(equals(activity('Check Azure Synapse Analytics Status').output.properties.status,'Online'), equals(pipeline().parameters.Command,'Pause')),and(equals(activity('Check Azure Synapse Analytics Status').output.properties.status,'Paused'), equals(pipeline().parameters.Command,'Resume')))

Click the Activity editor.

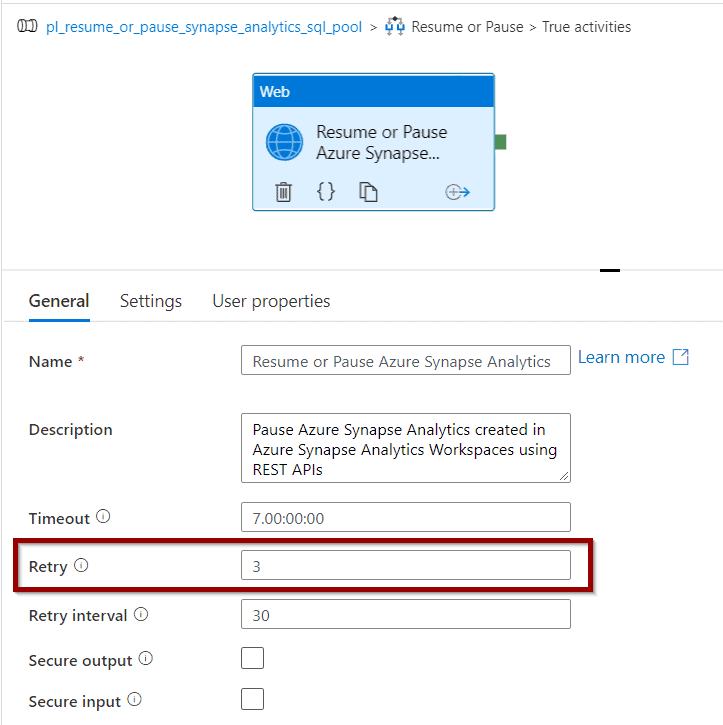

Inside the If Activity, include another Web Activity. This activity will execute the POST request and pause or resume your Azure Synapse Analytics SQL Pool.

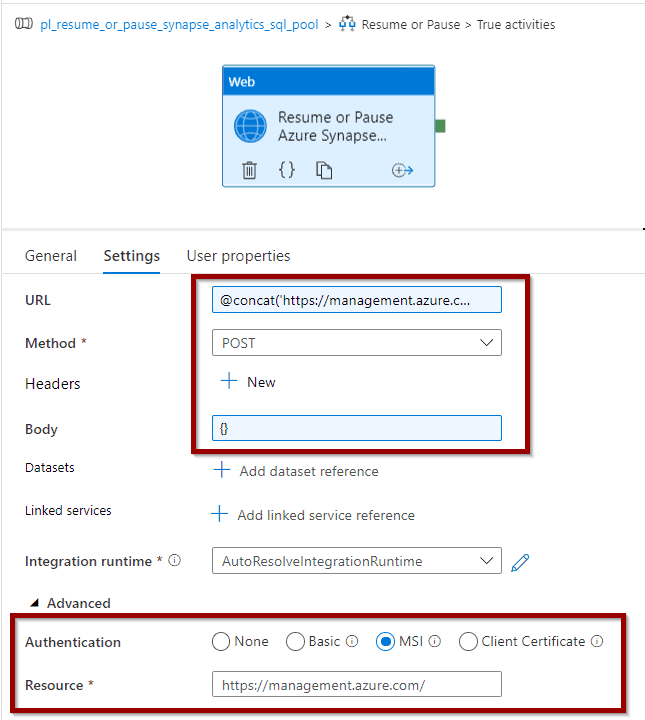

Configure the activity to POST and copy the following command.

For an Azure Synapse Analytics Workspace SQL Pool, copy the following command.

@concat('https://management.azure.com/subscriptions/',pipeline().parameters.SubscriptionId,'/resourceGroups/',pipeline().parameters.ResourceGroupName,'/providers/Microsoft.Synapse/workspaces/',pipeline().parameters.ServerName,'/sqlPools/',pipeline().parameters.DatabaseName,'/',pipeline().parameters.Command,'?api-version=2019-06-01-preview') For a dedicated SQL Pool, outside Synapse (Azure SQL Data Warehouse), copy the following command.

@concat('https://management.azure.com/subscriptions/',pipeline().parameters.SubscriptionId,'/resourceGroups/',pipeline().parameters.ResourceGroupName,'/providers/Microsoft.Sql/servers/',pipeline().parameters.ServerName,'/databases/',pipeline().parameters.DatabaseName,'/',pipeline().parameters.Command,'?api-version=2014-04-01-preview') That’s it! Now save your pipeline and you are ready to use it.

Summary

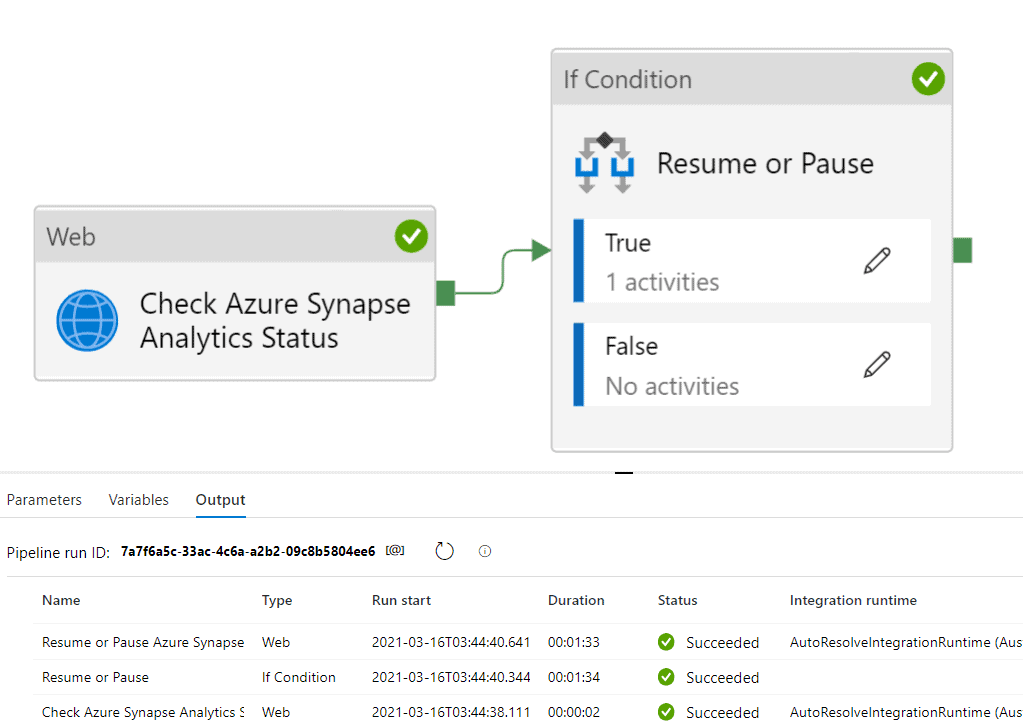

Today, we have had a look at Pausing and Resuming an Azure Synapse Analytics SQL Pool using integration pipelines in Synapse Workspaces or Azure Data Factory.

What’s Next?

In upcoming blog posts, we’ll continue to explore some of the features within Azure Data Services.

Please follow Tech Talk Corner on Twitter for blog updates, virtual presentations, and more!

As always, please leave any comments or questions below.

4 Responses

Rolly D

08 . 07 . 2021Granting SQL DB Contributor to the managed identity results in exactly the same error as you have listed and does not solve the problem.

David Alzamendi

08 . 07 . 2021Hi Rolly D,

I have tested again the “SQL DB Contributor” role and it works, for Synapse Workspaces dedicated SQL Pools you need to use the “Contributor” role. I have added 1 more screenshot to avoid confusions.

Let me know if you still have issues.

Regards,

David

Nauman

16 . 08 . 2021Thanks for the detailed steps. Even after giving the synapse workspace contribute permissions, I get the same error.

Anthony

09 . 12 . 2023David,

Thanks to your very detailed help I managed to successfully setup the appropriate jobs and more importantly bridge the gap between not knowing what to do and a better understanding of Azure Synapse pipelines.

Saludos y muchas gracias por la ayuda,

Anthony