Azure Data Factory copy activity logs help you save time building custom logging functionalities.

During the past few years, I’ve been designing and implementing data movement frameworks. Logging is an important characteristic to consider when designing these frameworks. Azure Data Factory allows me to choose if I want to use the native logging within the copy activity instead of implementing this functionality myself.

Table of Contents

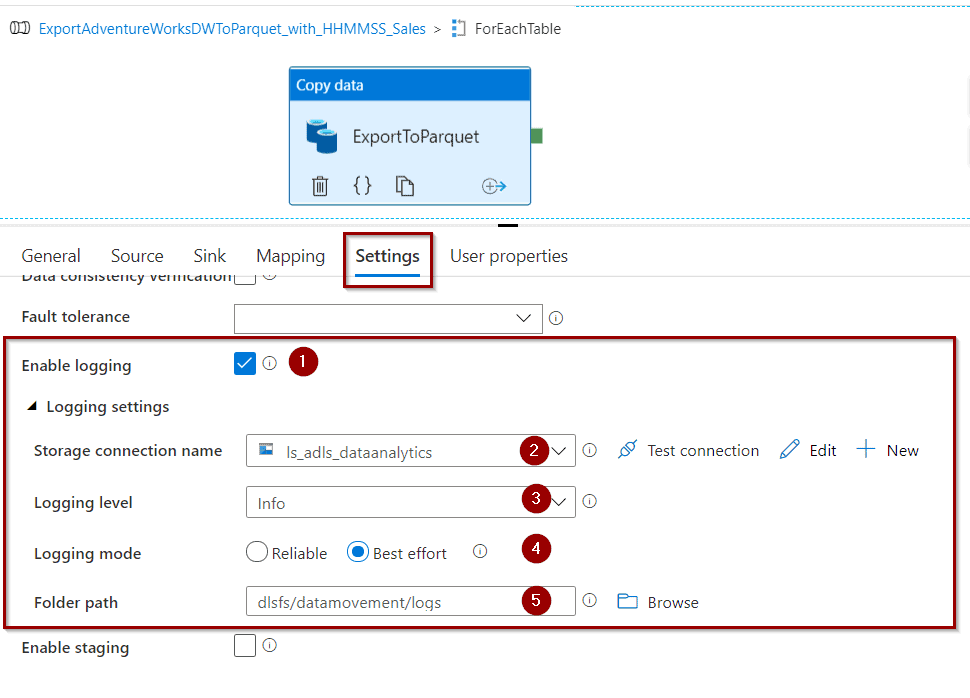

Enabling Azure Data Factory Copy Activity Logs

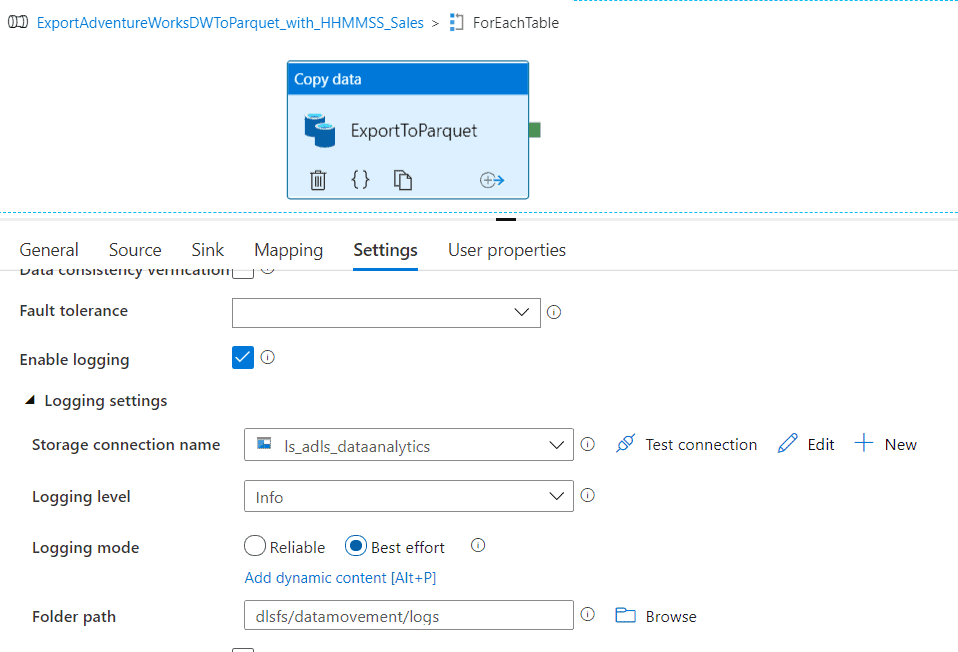

First, to enable this function, go to your copy activity. In the Settings section, click “Enable logging.”

- Enable / Disable Azure Data Factory copy activity logging

- Select the storage account where you want to store the logs

- Choose the logging level

- Info level – log all copied files, skipped files and skipped rows

- Warning level – log skipped files and skipped rows only

- Select the logging mode

- Reliable mode – flush logs immediately once data is copied to the destination

- Best effort mode – flush logs with batch of records

- Choose the folder to store the logs

Testing Azure Data Factory Copy Activity Logs

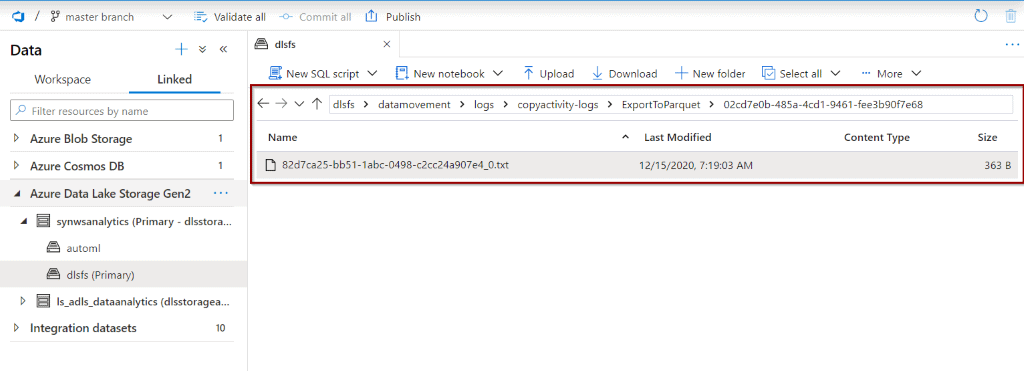

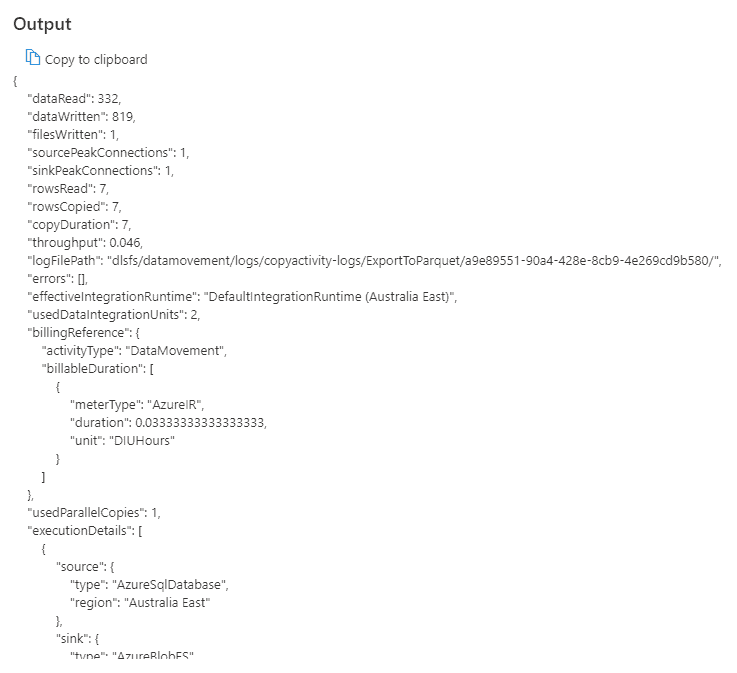

Next, once you’ve configured the logs, trigger the pipeline to start capturing logs.

Surprisingly, the folders where the files are being stored don’t include the pipeline name.

/{Logs Folder}/copyactivity-logs/{Copy Activity Name}/{Run Id}/ The files extension is txt, but they are delimited files (CSV).

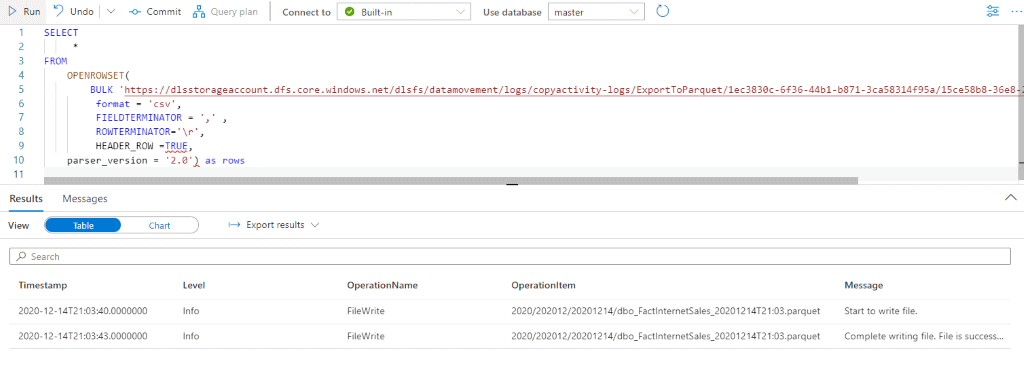

Reading Azure Data Factory CSV files using Azure Synapse Analytics

To read the files, you can use Azure Synapse Analytics Serverless. Because they are .txt extension files, you need to copy the following lines of code. You cannot use the menu context.

SELECT

*

FROM

OPENROWSET(

BULK 'https://dlsstorageaccount.dfs.core.windows.net/dlsfs/datamovement/logs/copyactivity-logs/ExportToParquet/1ec3830c-6f36-44b1-b871-3ca58314f95a/15ce58b8-36e8-251a-874a-b450fef98815_0.txt',

format = 'csv',

FIELDTERMINATOR = ',' ,

ROWTERMINATOR='\r',

HEADER_ROW =TRUE,

parser_version = '2.0') as rows Example:

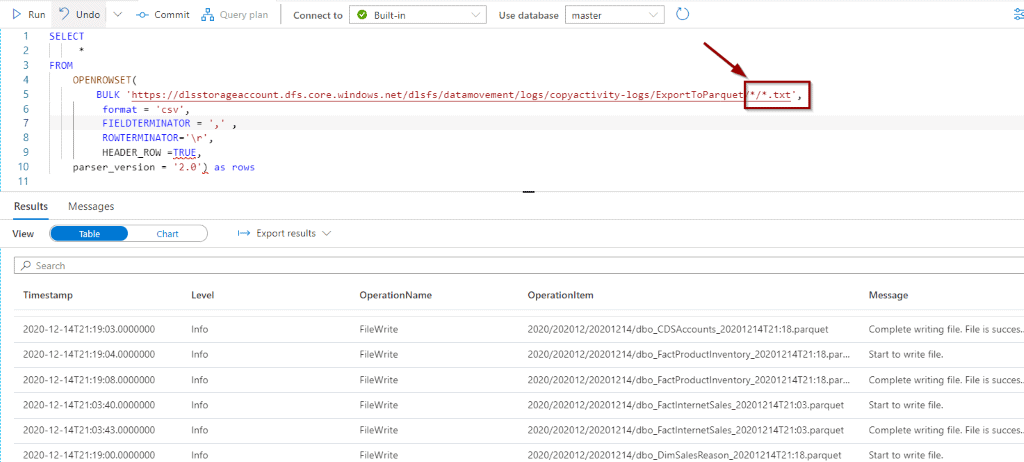

By using wildcards, you can read all the files.

SELECT

*

FROM

OPENROWSET(

BULK 'https://dlsstorageaccount.dfs.core.windows.net/dlsfs/datamovement/logs/copyactivity-logs/ExportToParquet/*/*.txt',

format = 'csv',

FIELDTERMINATOR = ',' ,

ROWTERMINATOR='\r',

HEADER_ROW =TRUE,

parser_version = '2.0') as rows Example:

Final Thoughts

In the end, I like this function because it allows me to store logs without building additional logic within the data movement. I’d like to see an additional option to store the following information in logs as part of this feature.

Summary

In summary, you’ve learned how to store copy activity logs within Azure Data Factory. You also learned how to query these files using Azure Synapse Analytics.

What’s Next?

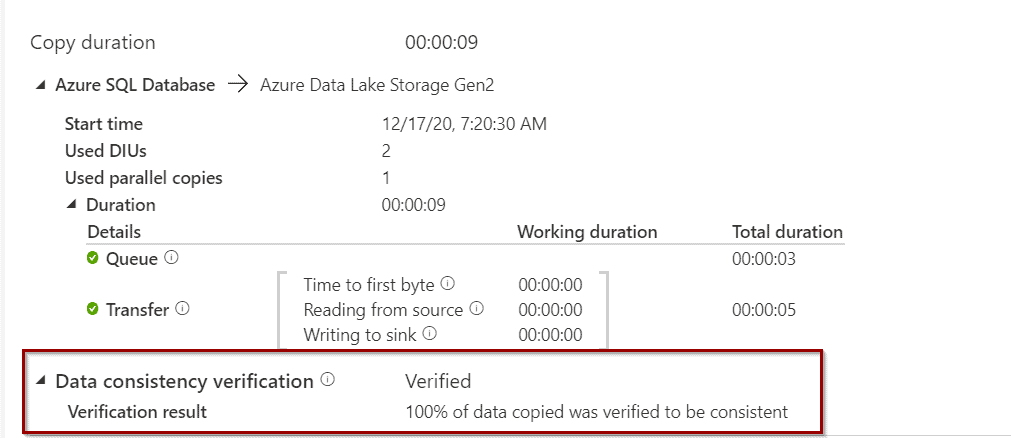

In my next blog post, we’ll have a look at Azure Data Factory Data Consistency Verification to validate the integrity of data movement.

Please follow Tech Talk Corner on Twitter for blog updates, virtual presentations, and more!

If you have any questions, please leave a comment below!

1 Response

Manish

23 . 05 . 2021Enabled logging with Info Level and Best effort in Copy activity, Source is a file on ADLS and target is synapse analytics. The file got created in log folder but it just has headers without any data. My ADF Managed Identity is a storage blob data contributor in ADLS.